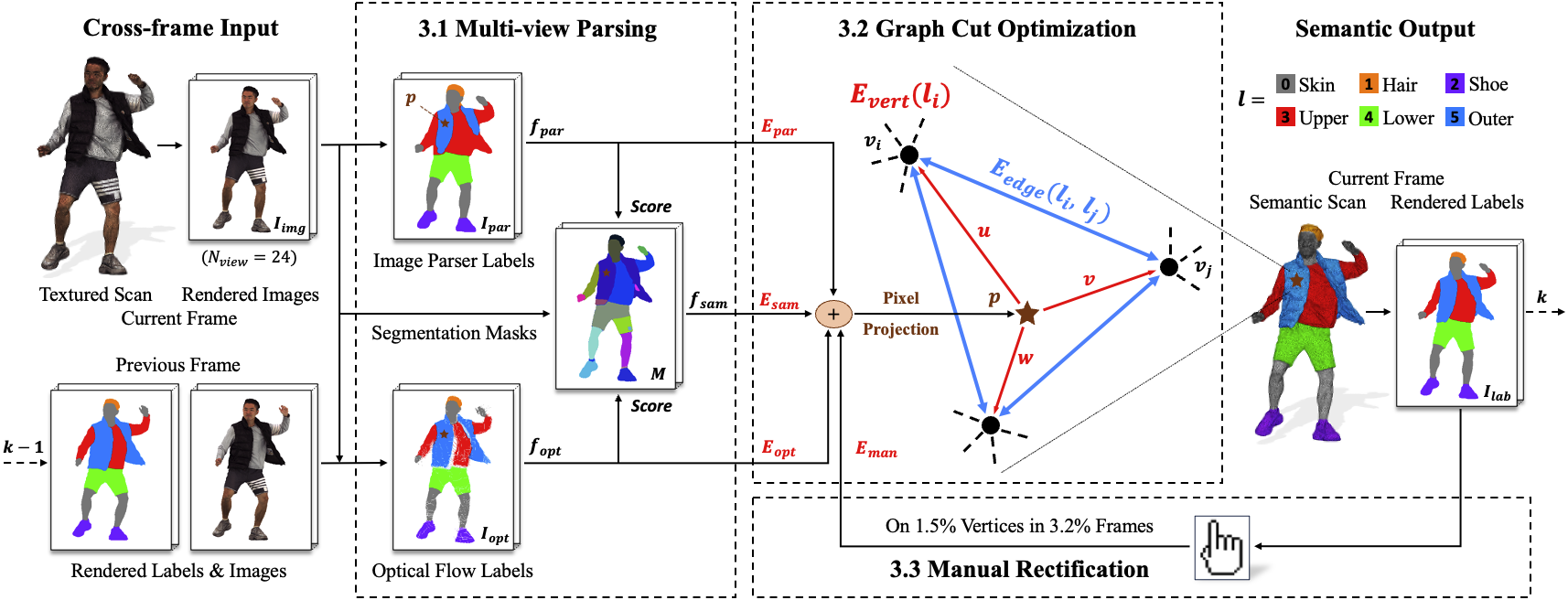

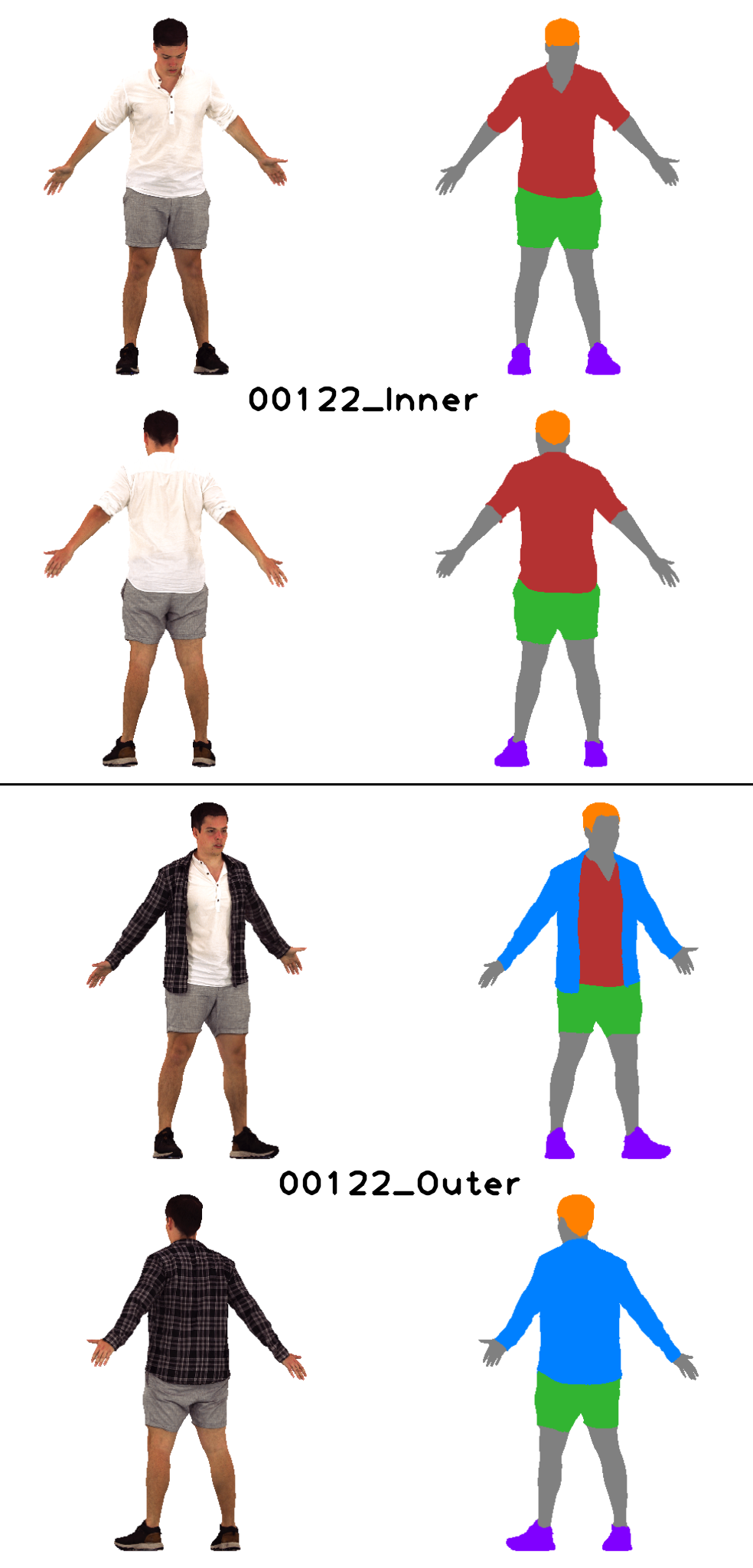

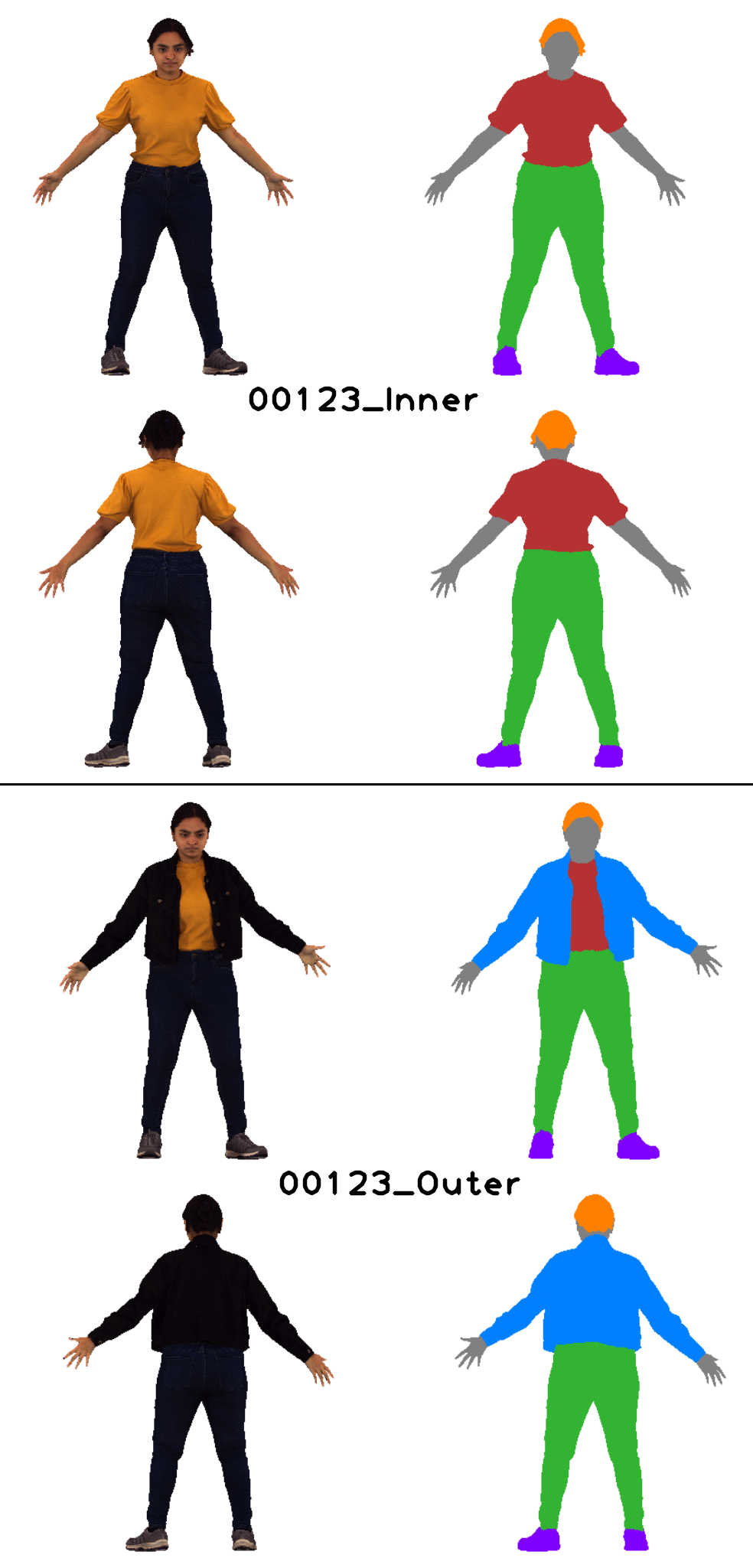

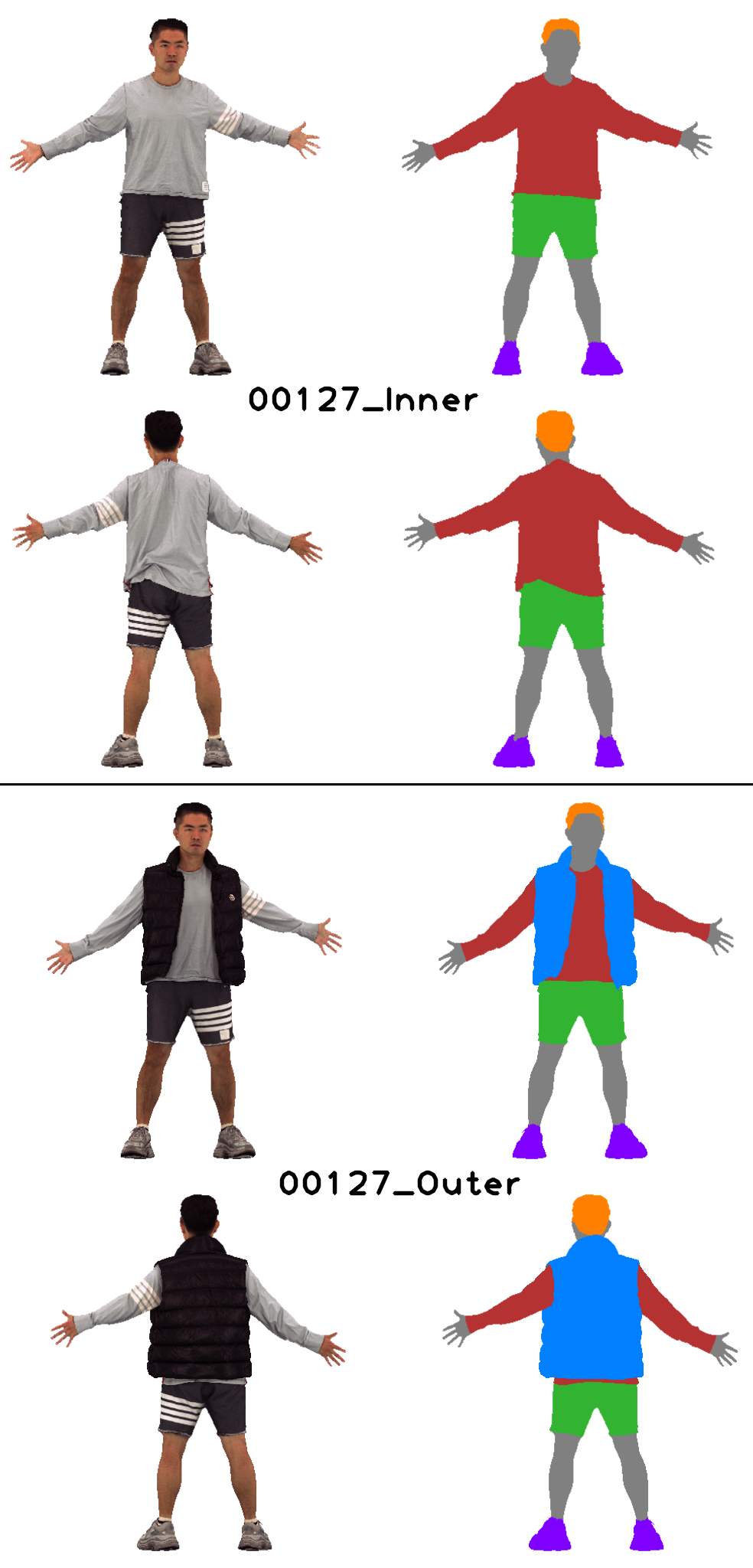

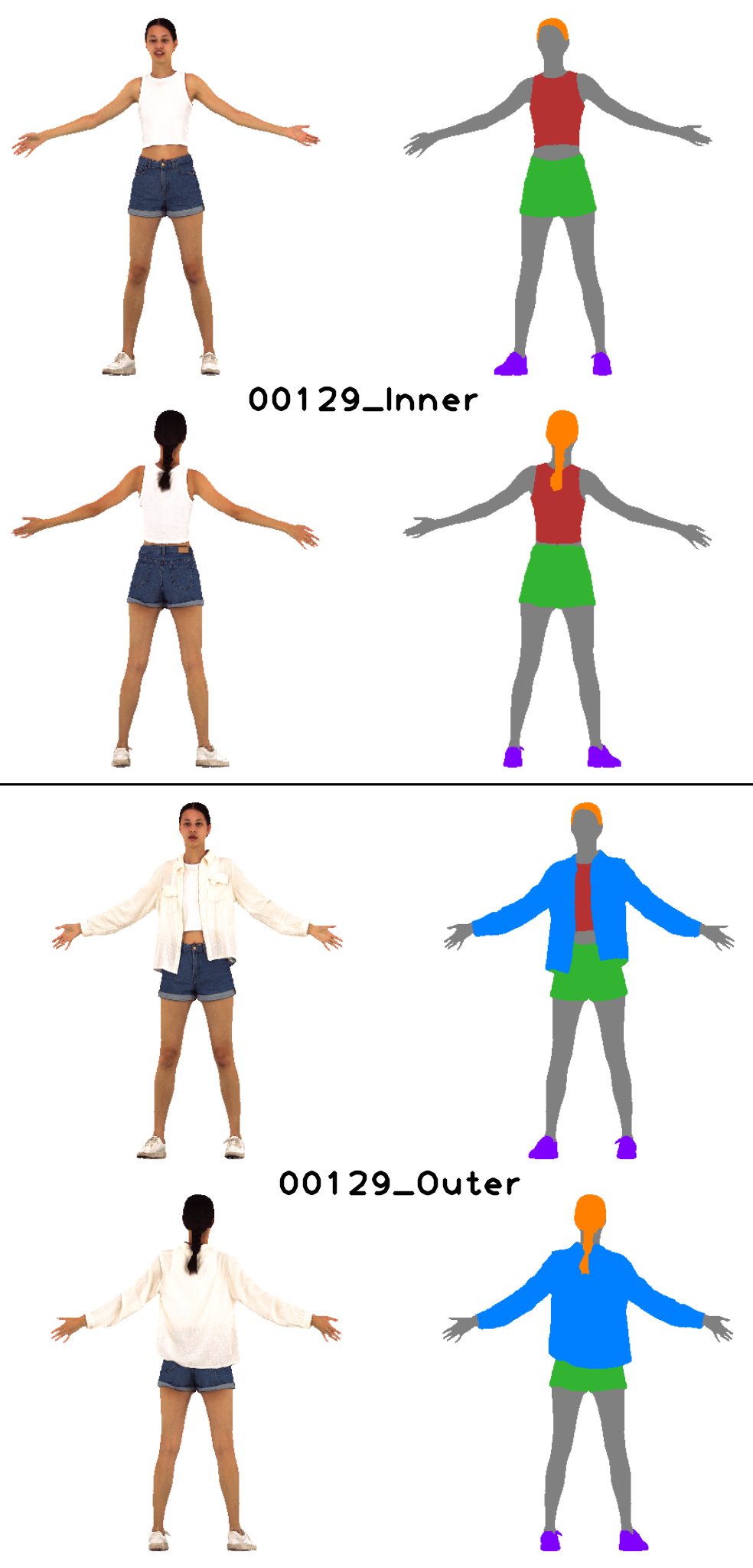

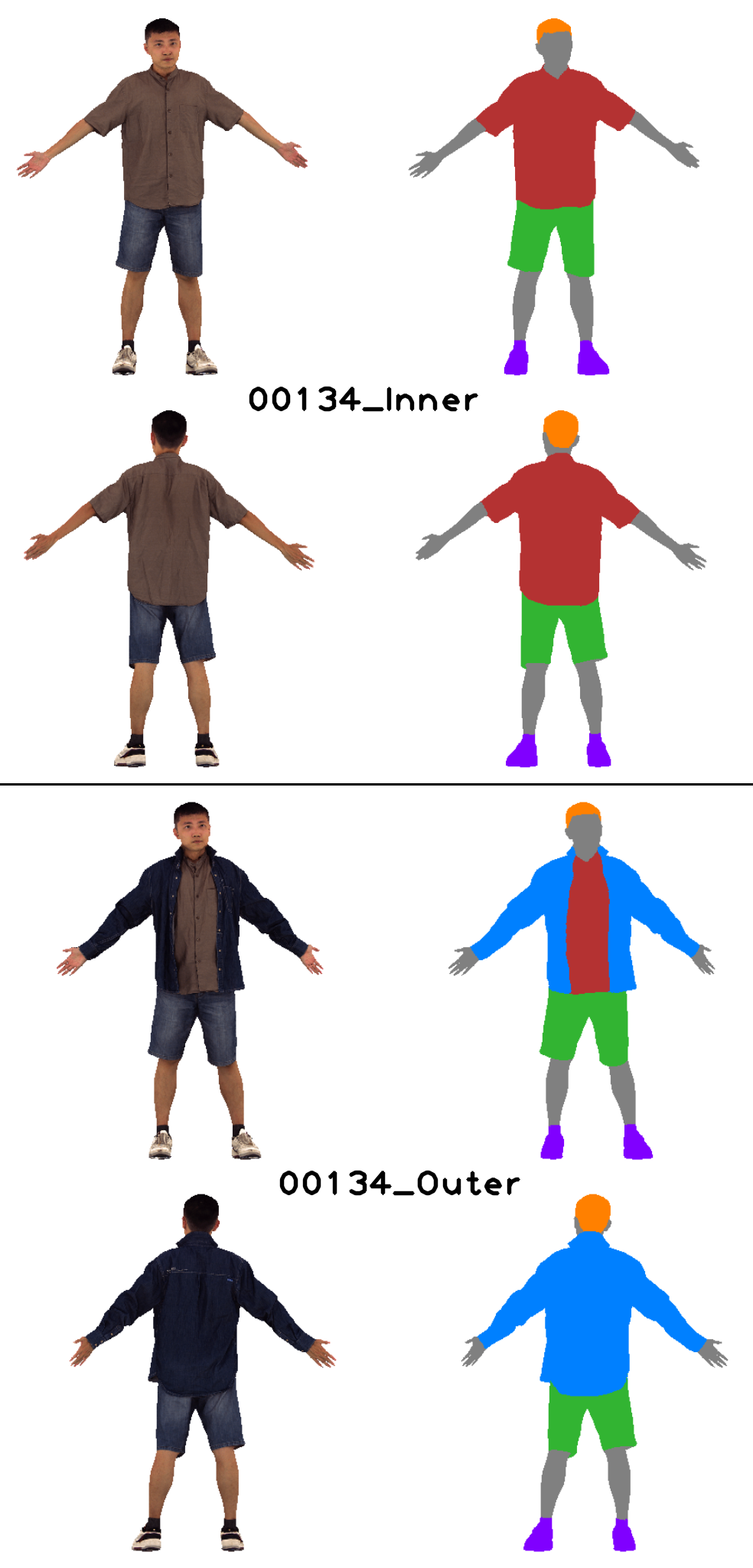

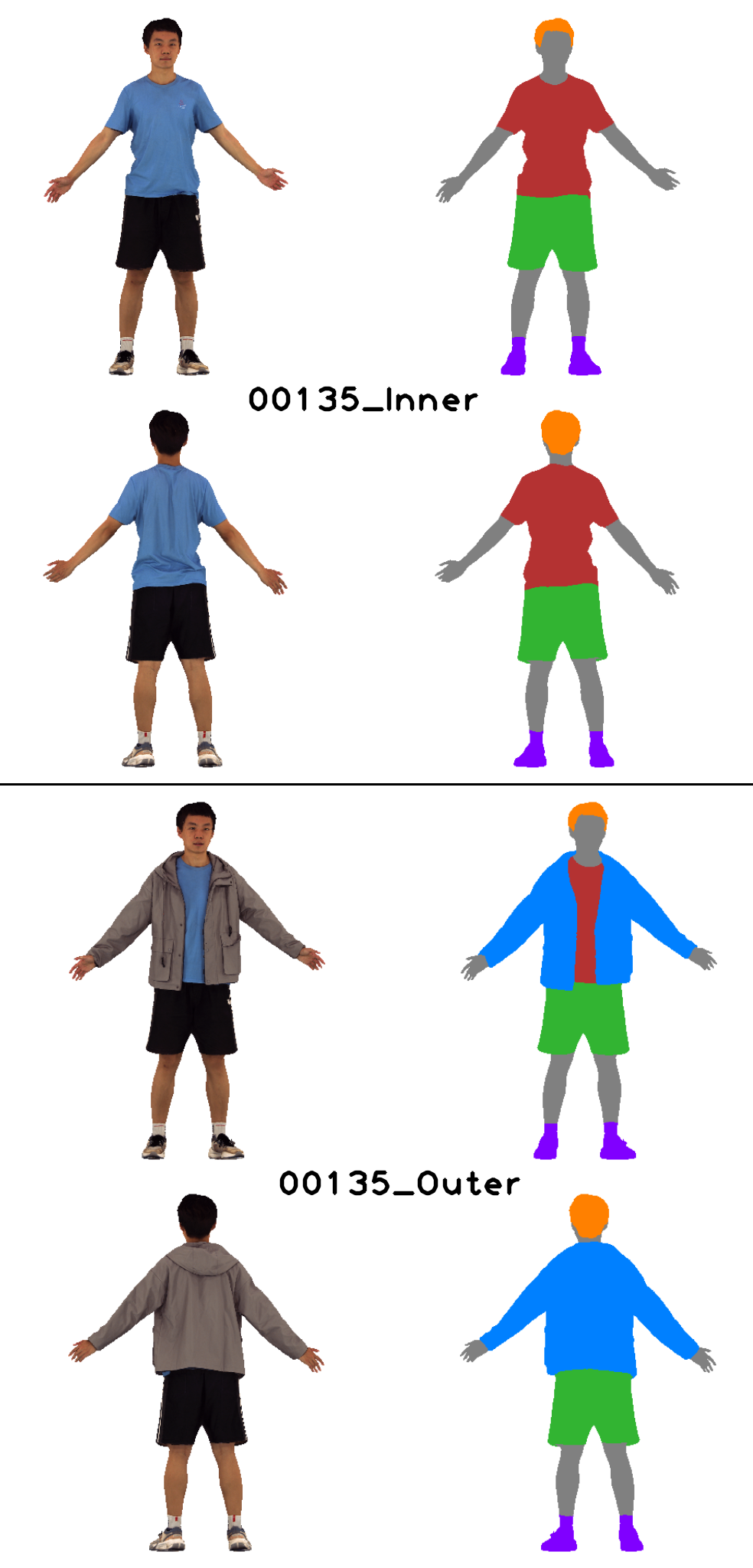

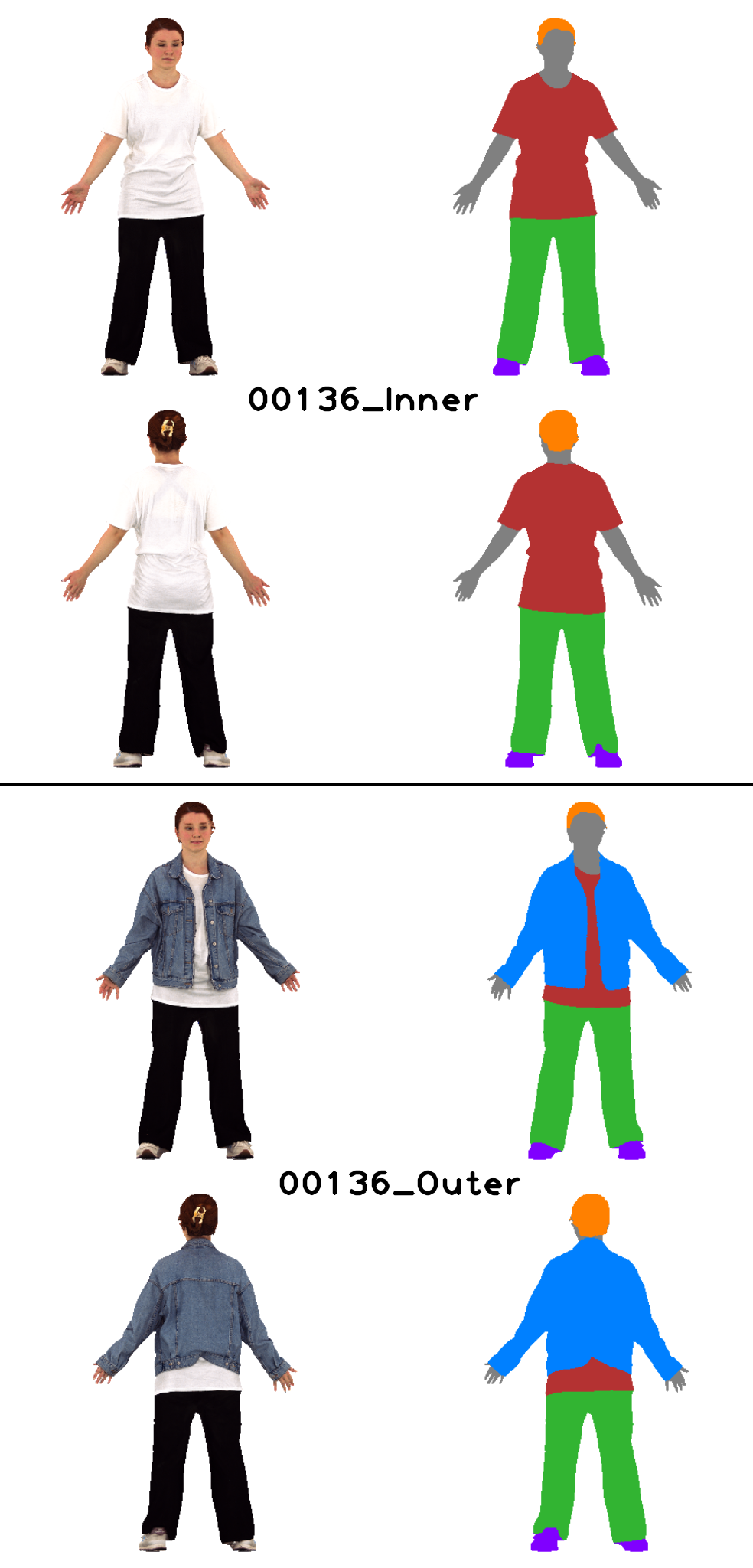

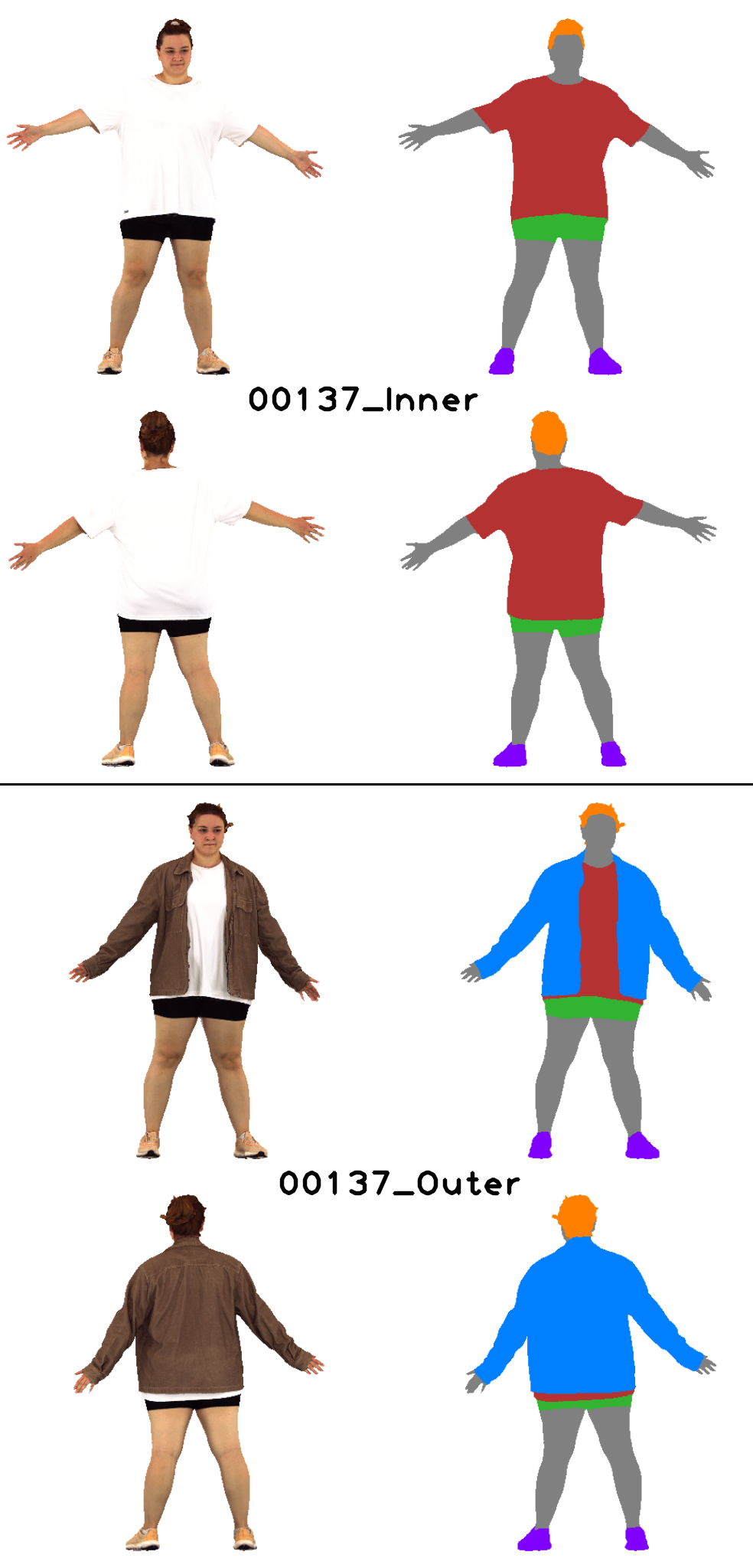

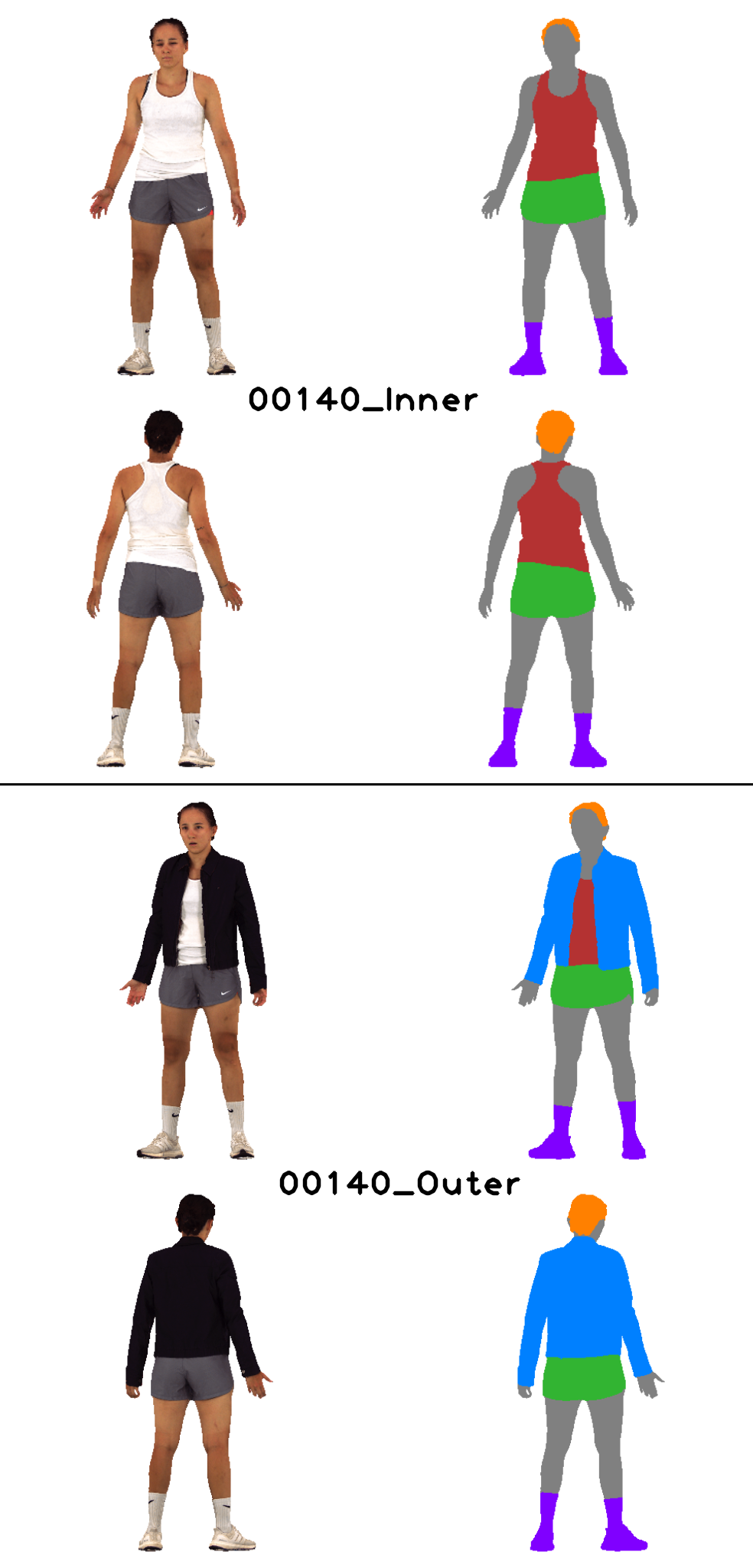

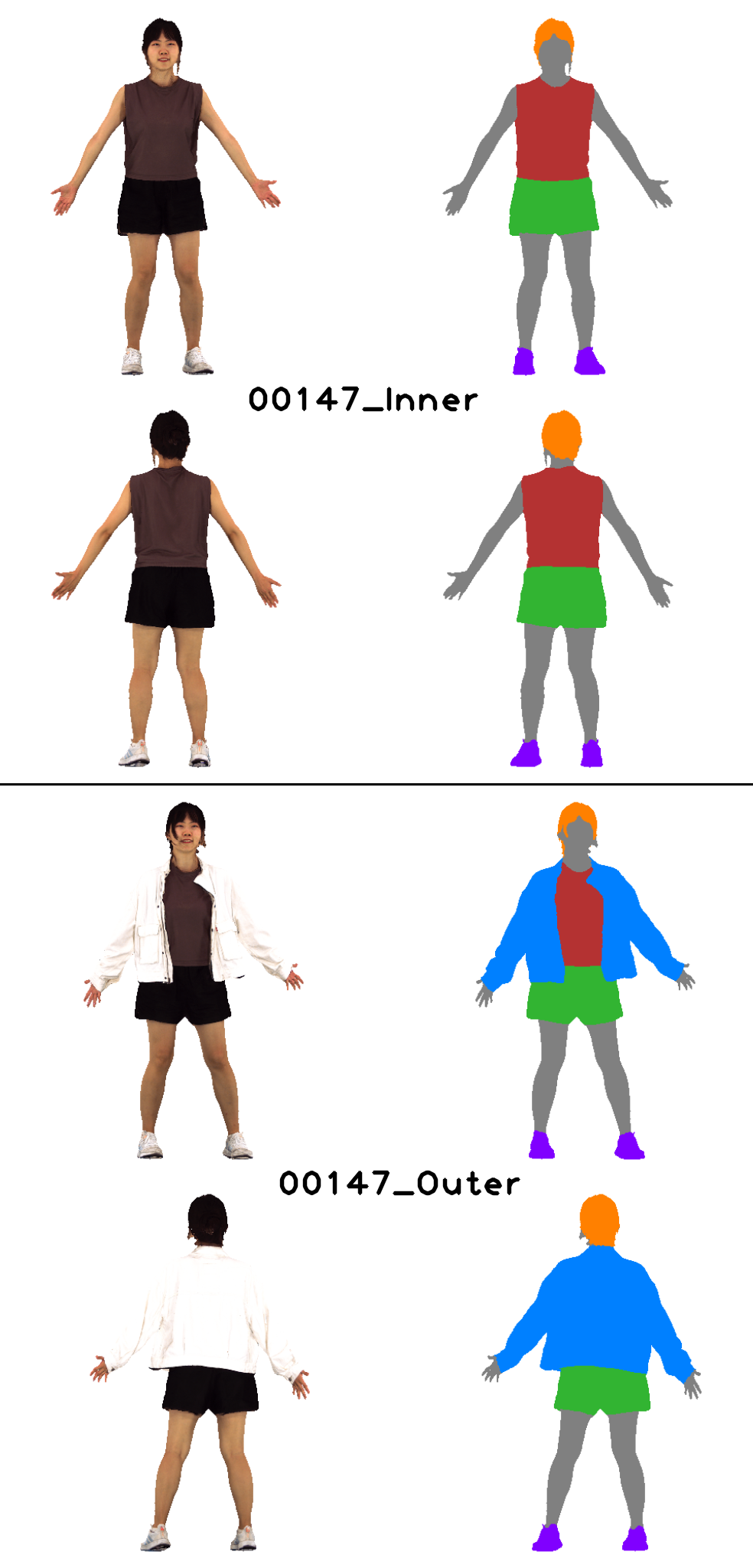

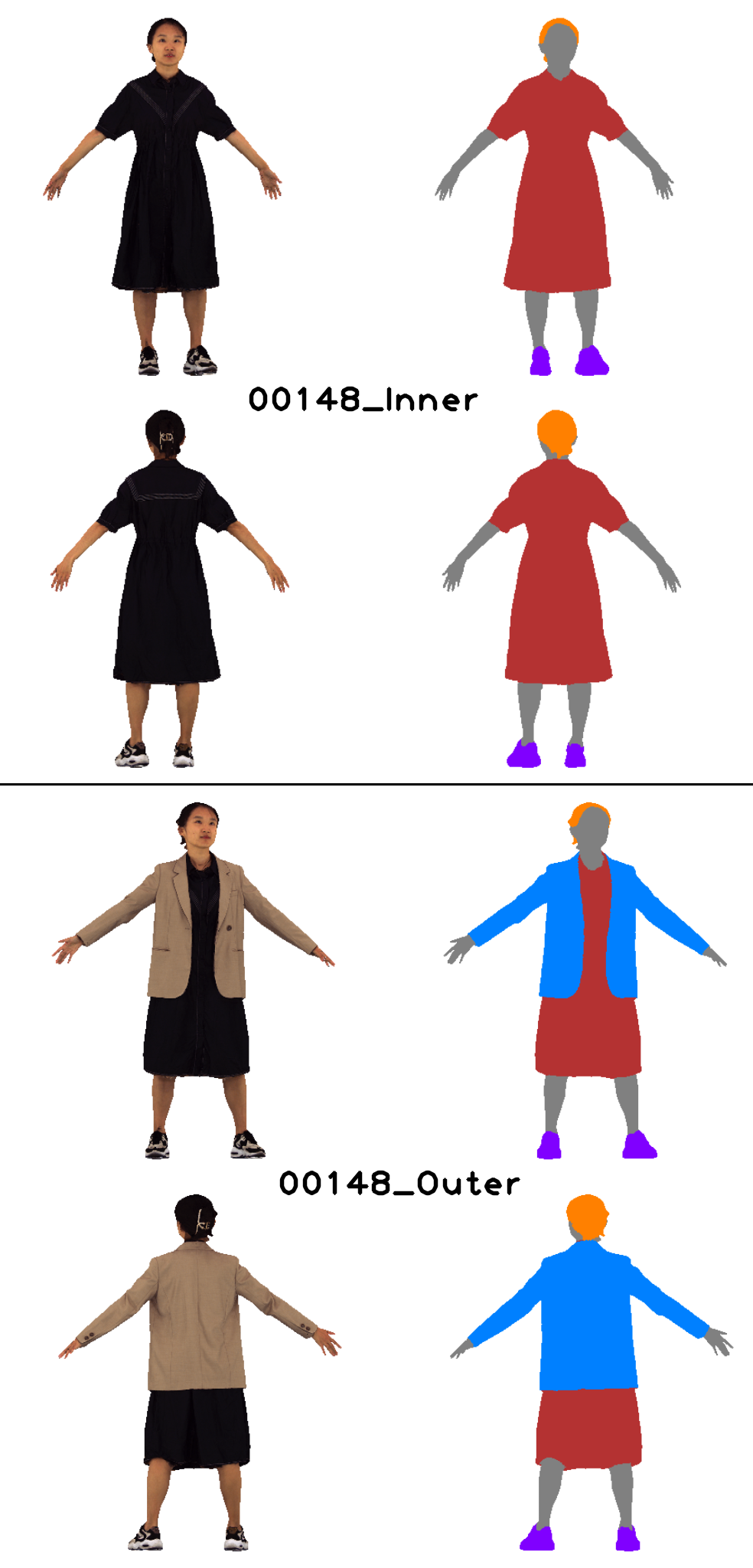

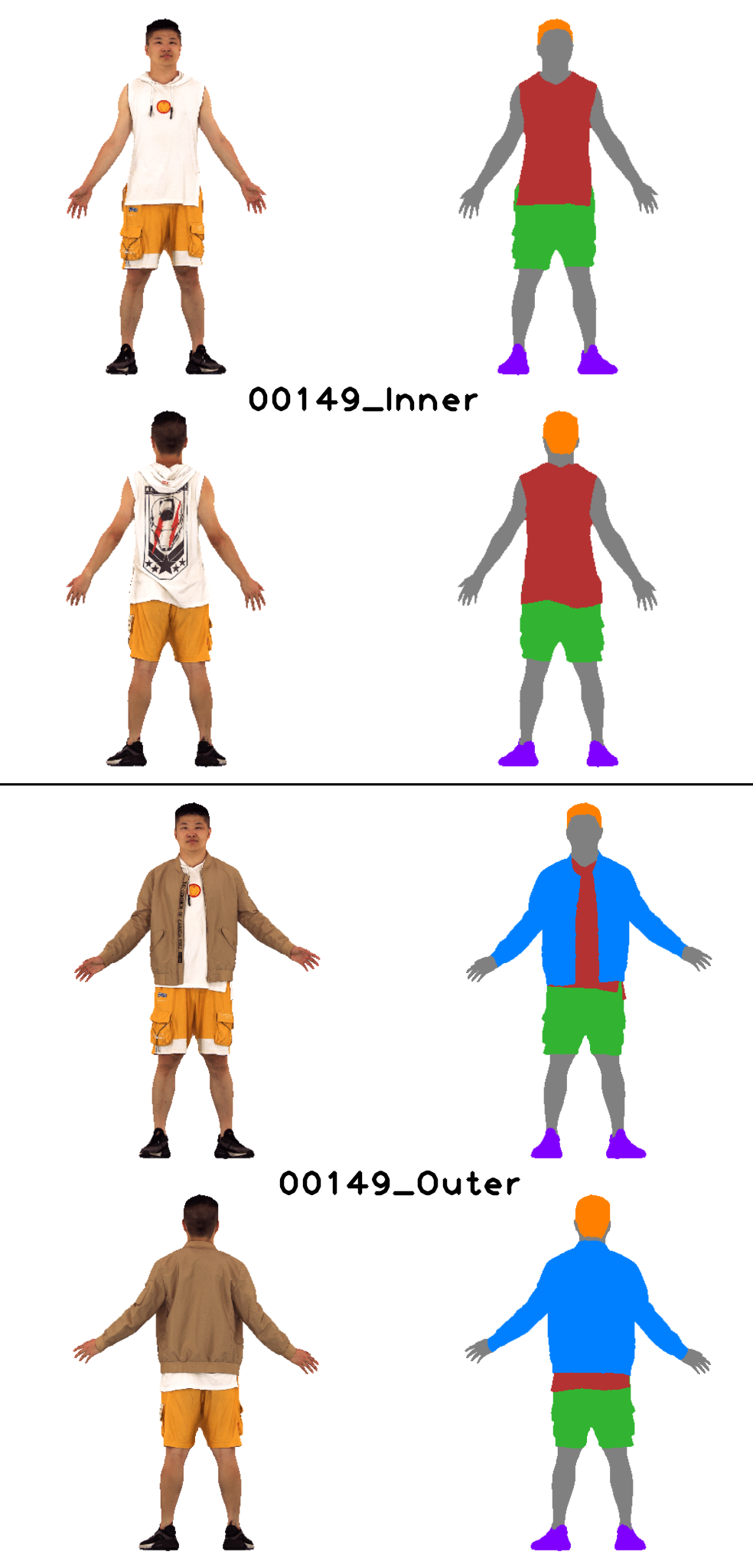

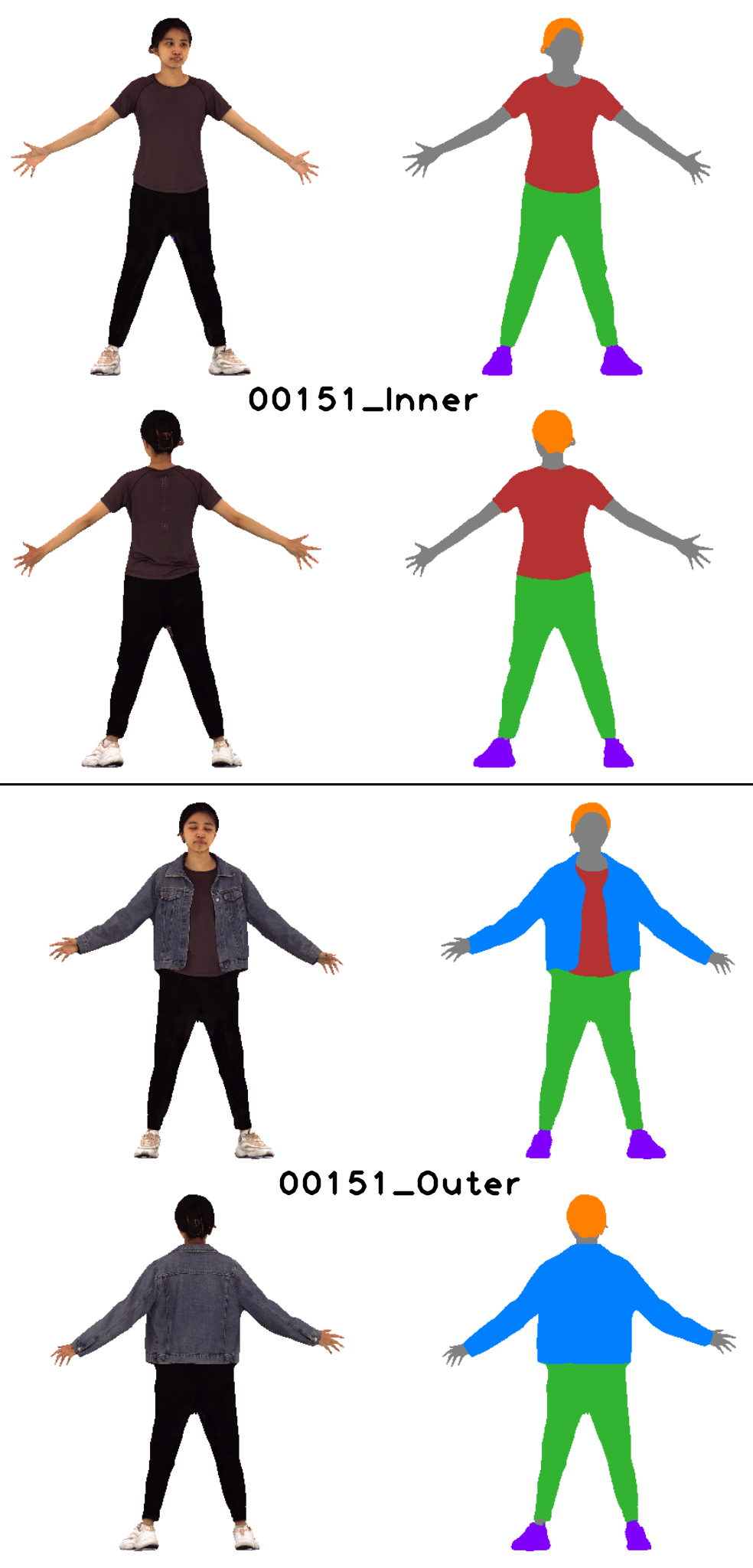

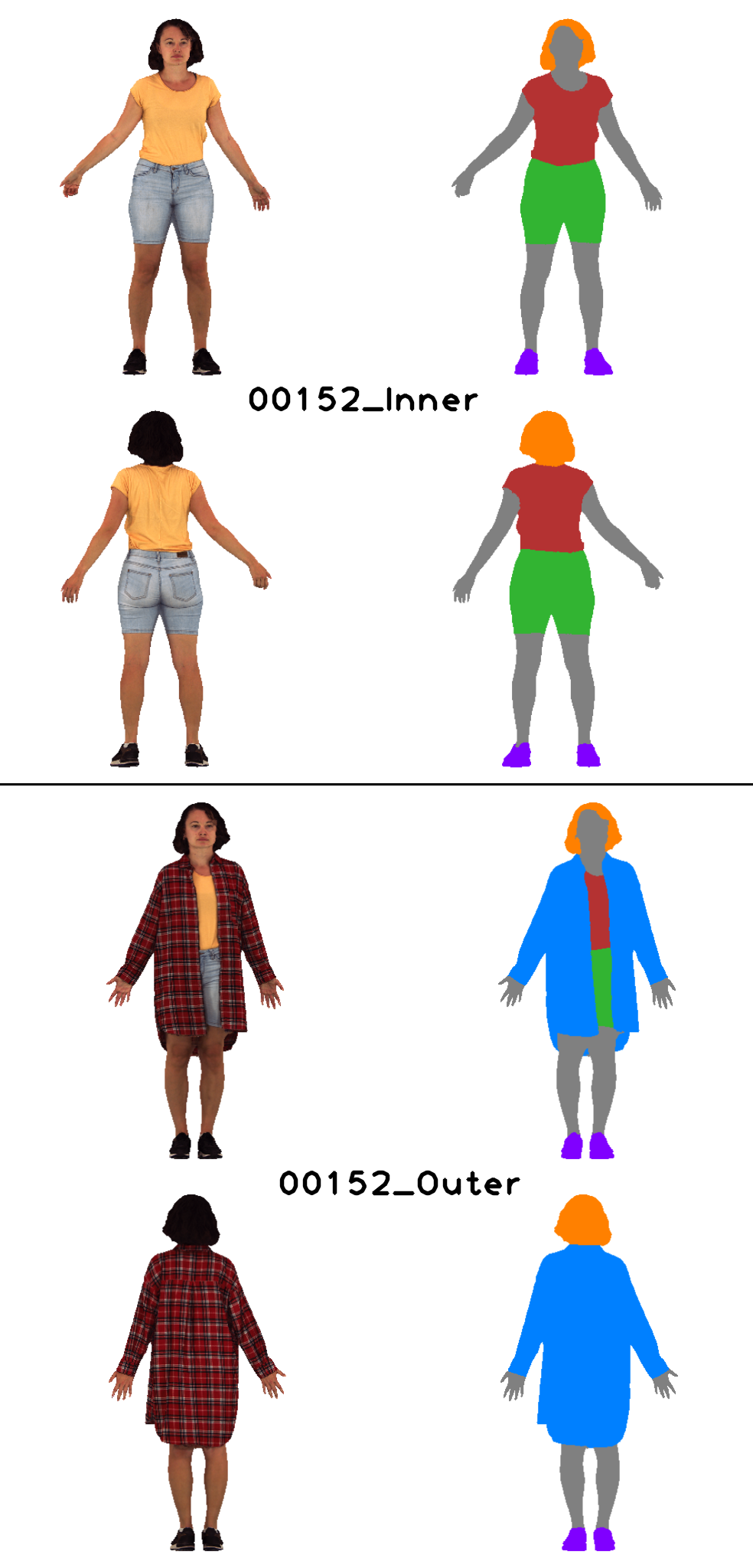

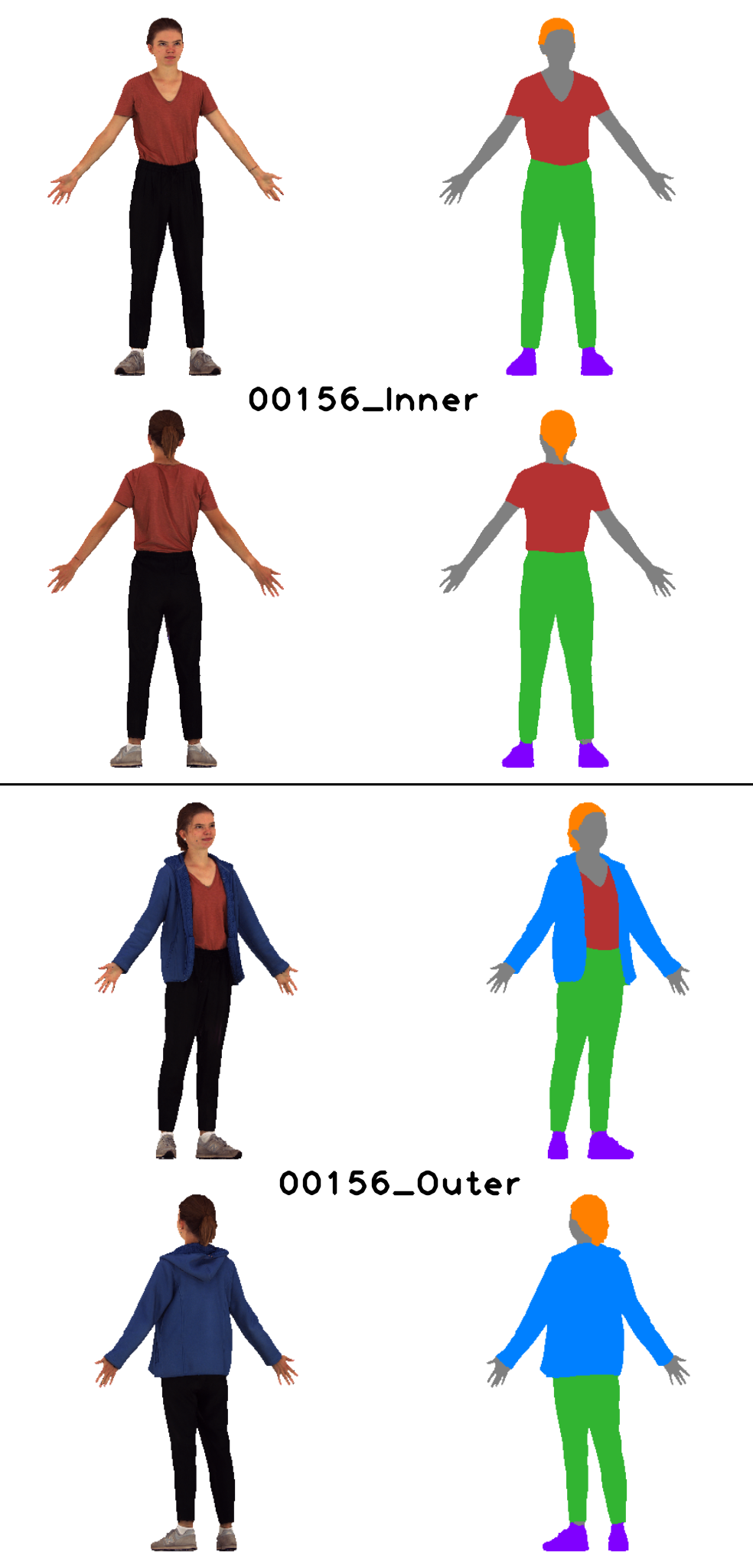

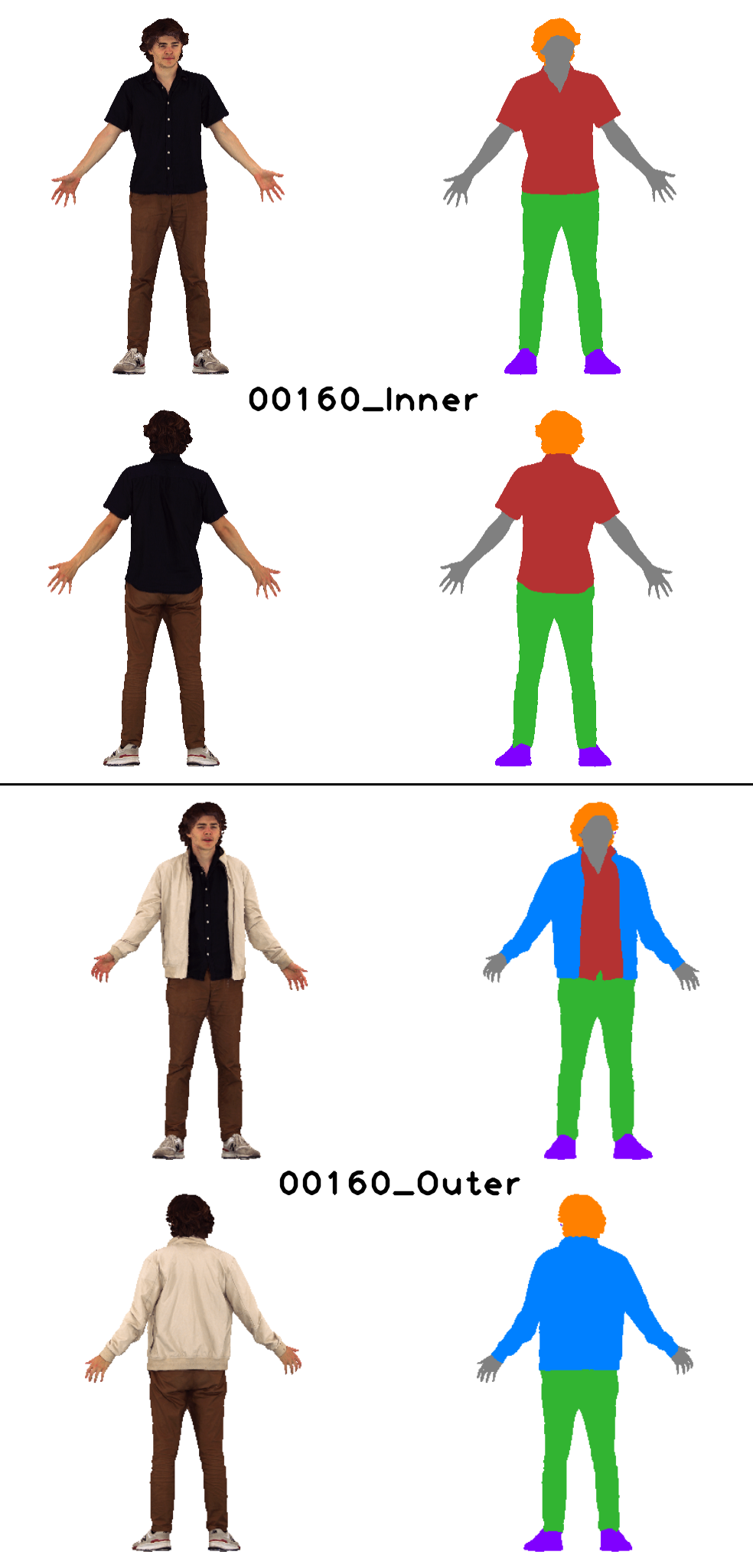

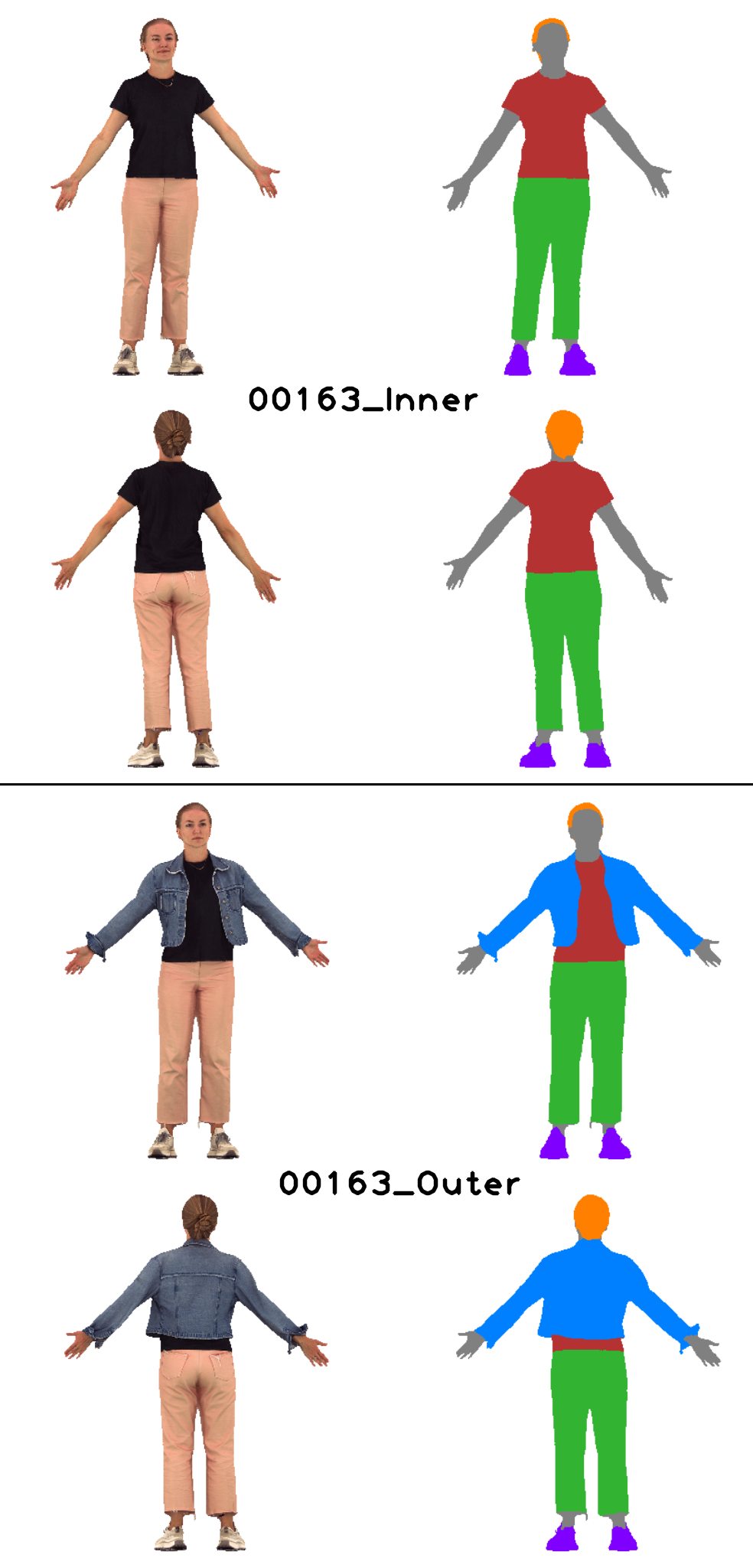

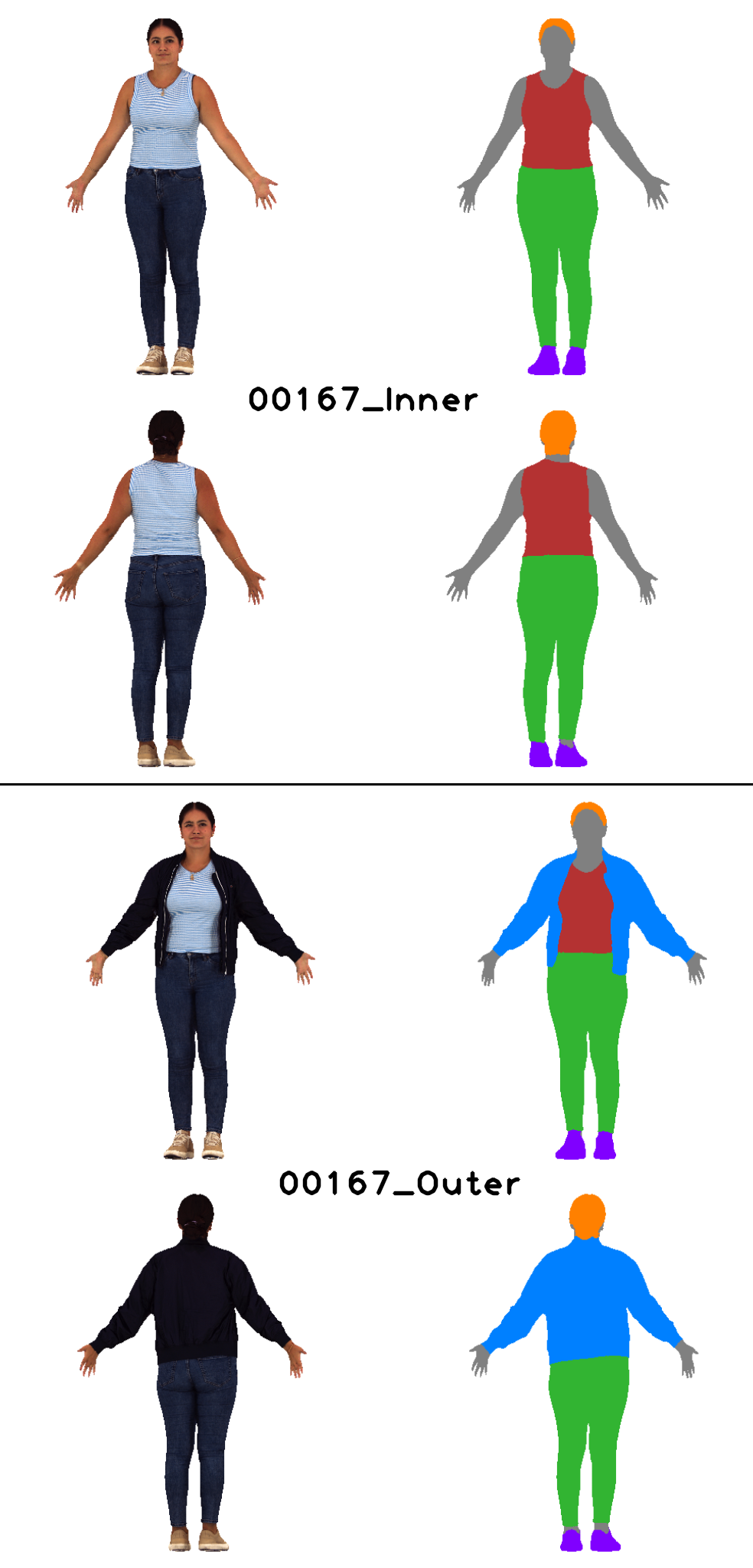

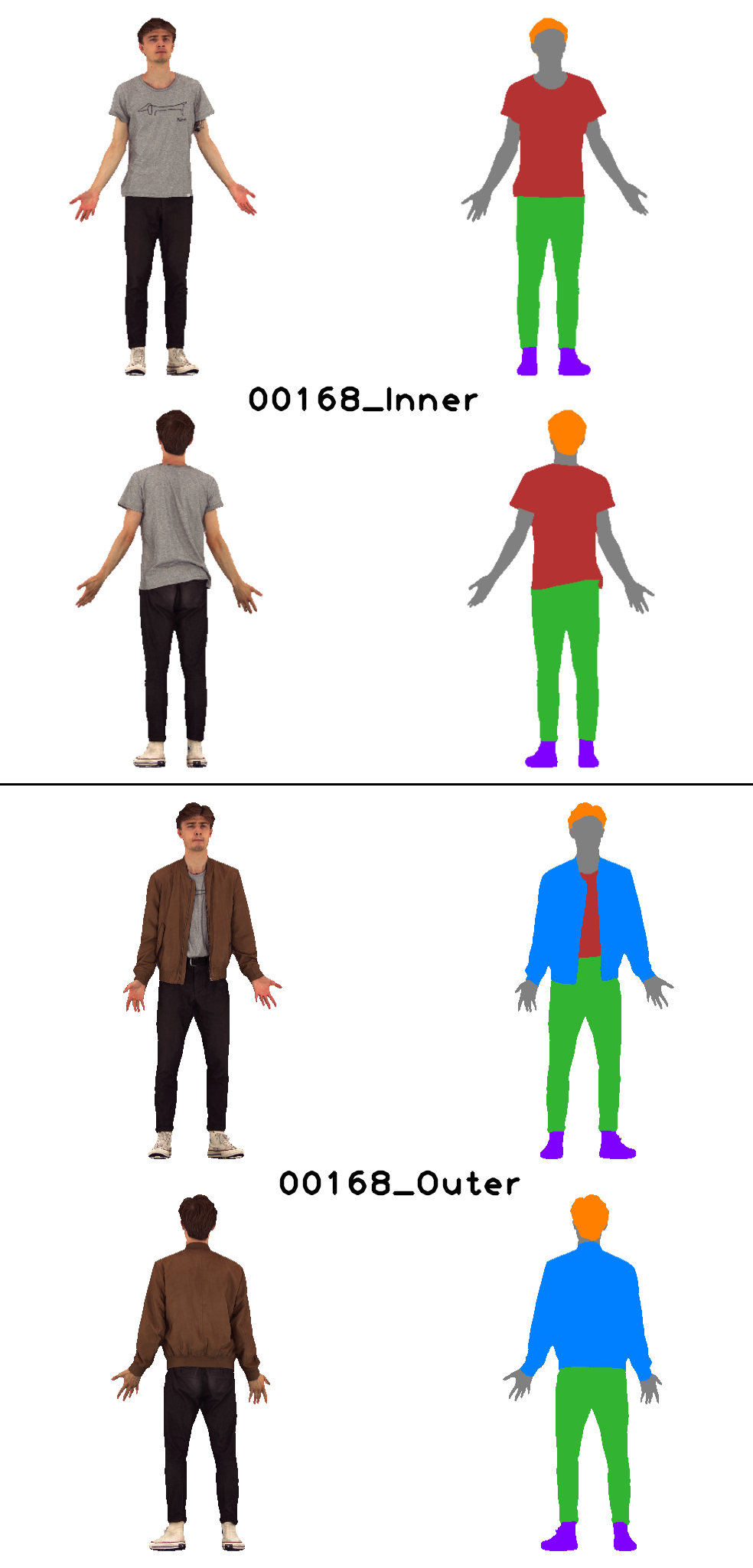

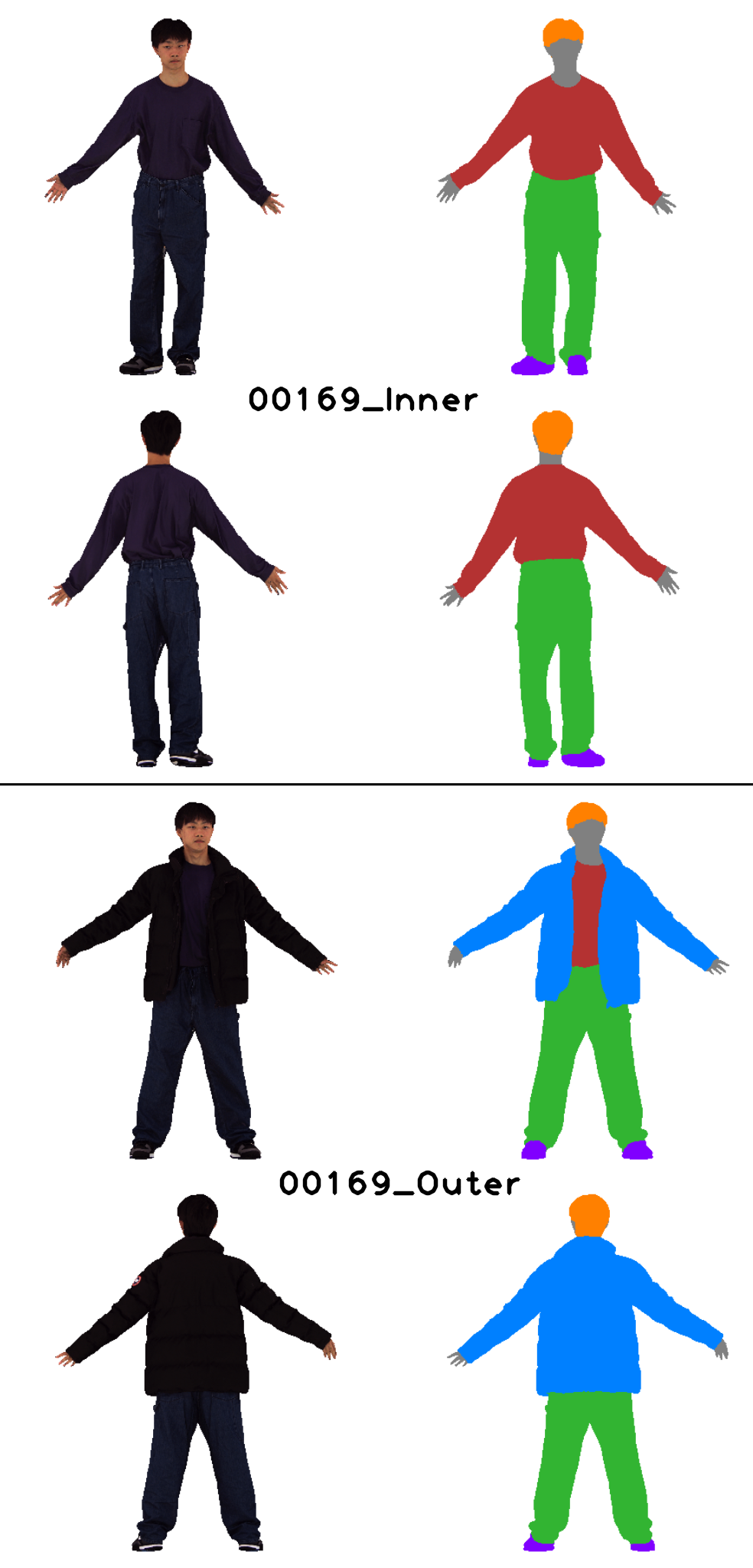

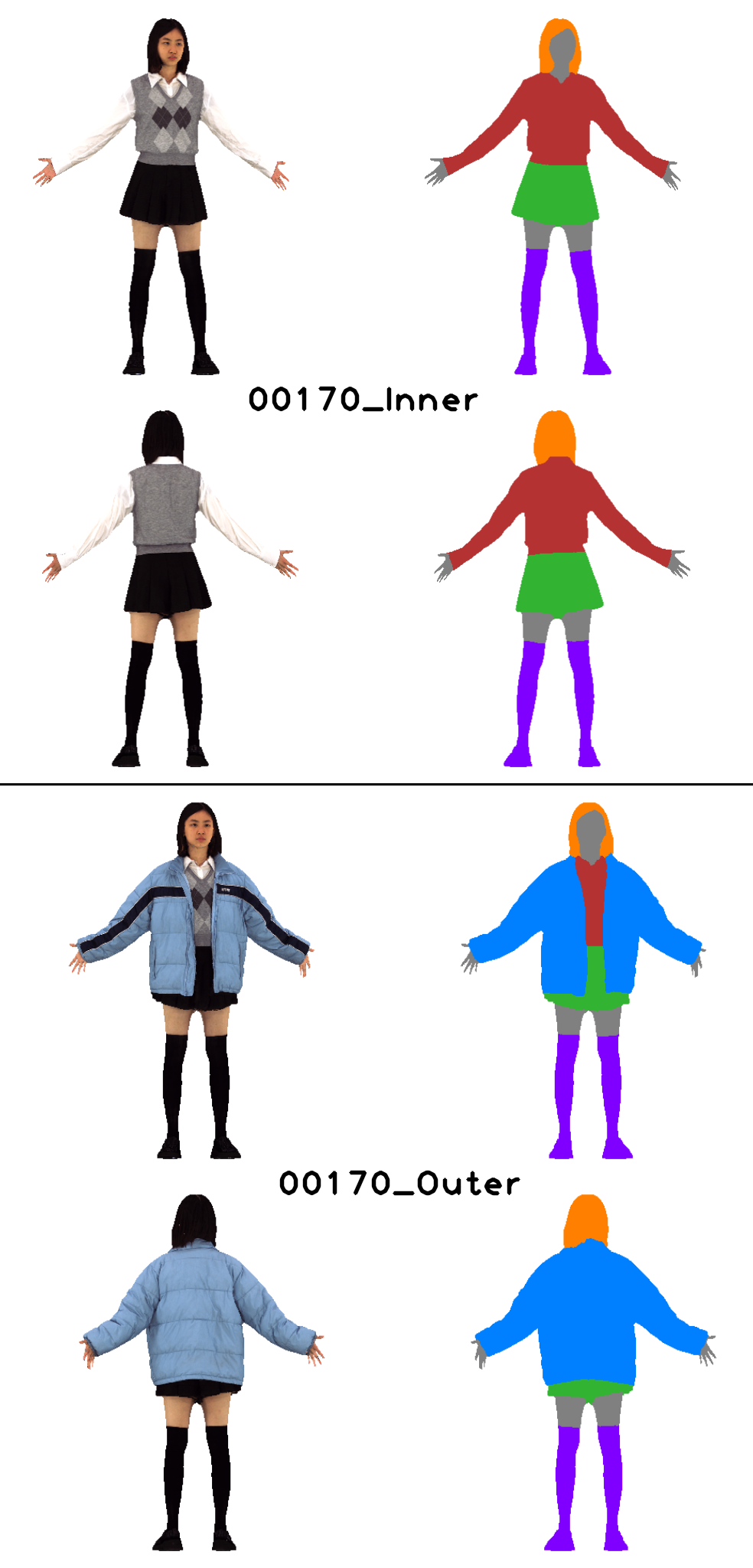

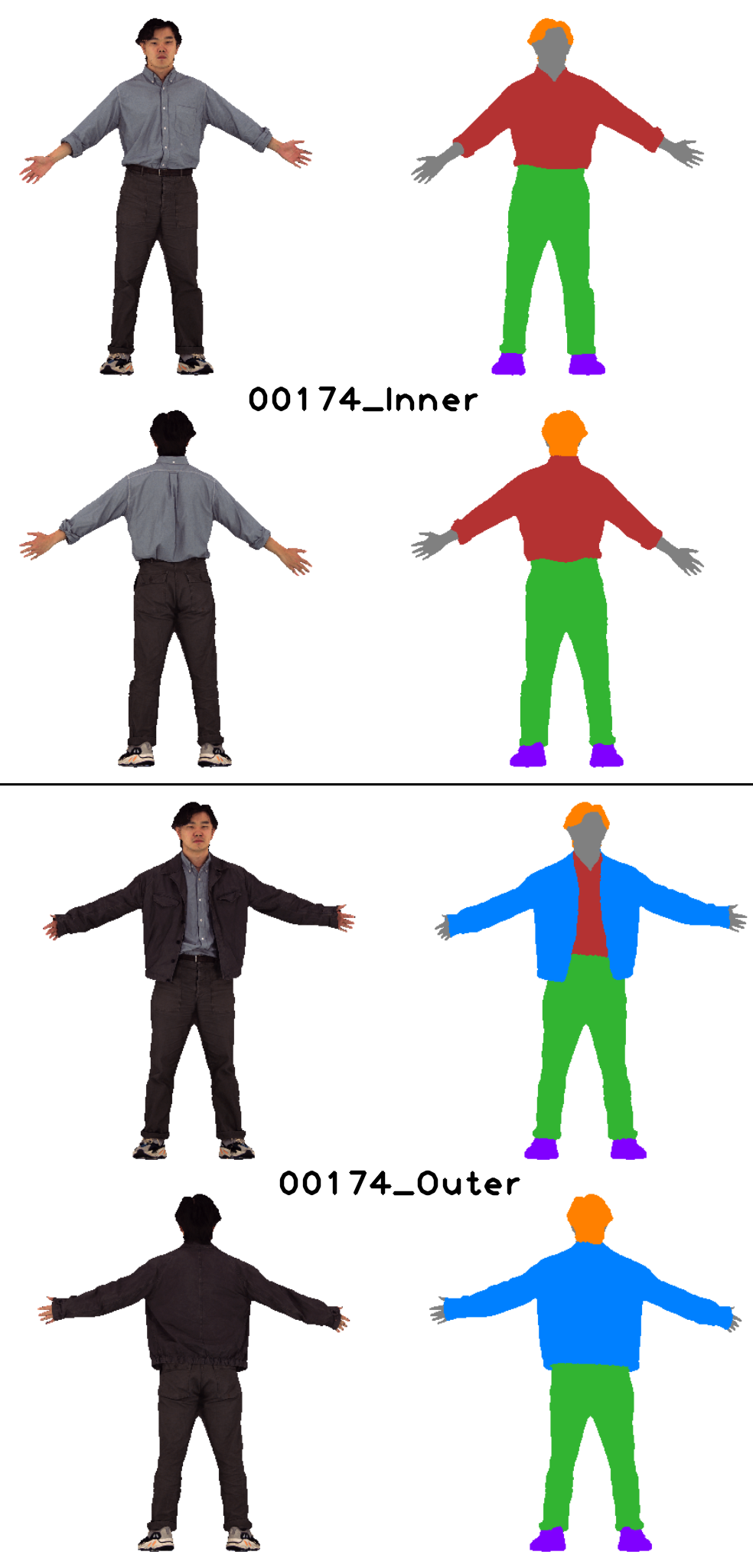

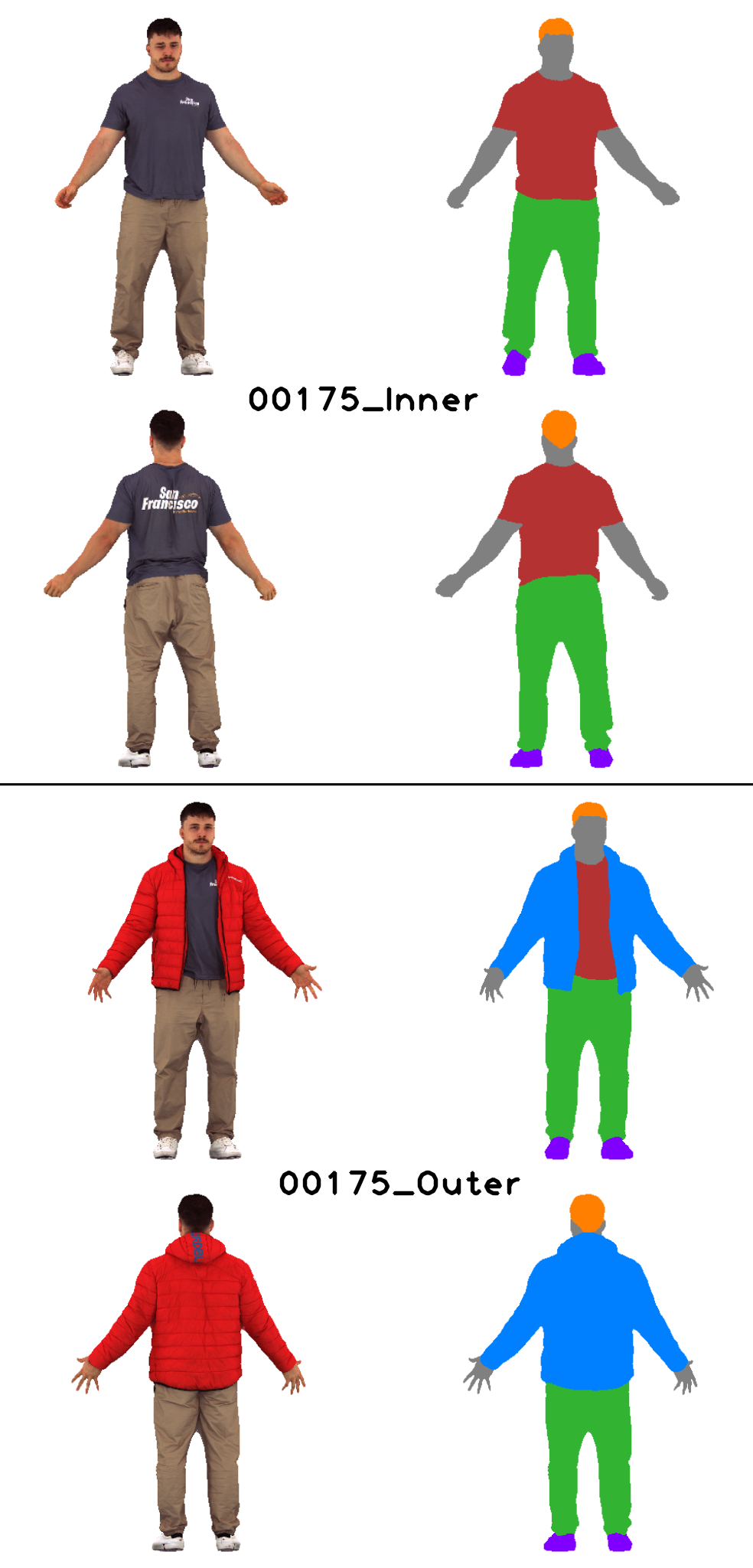

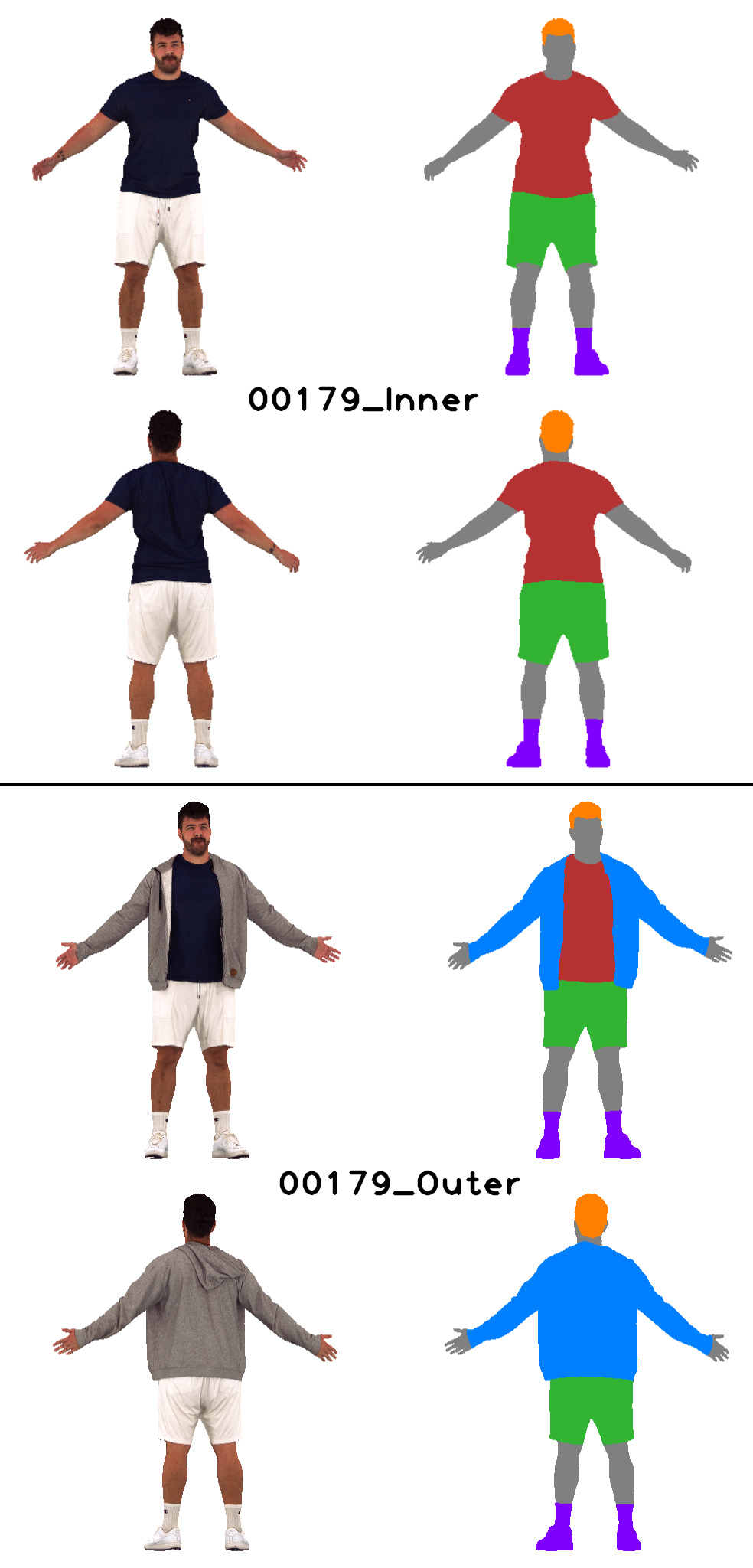

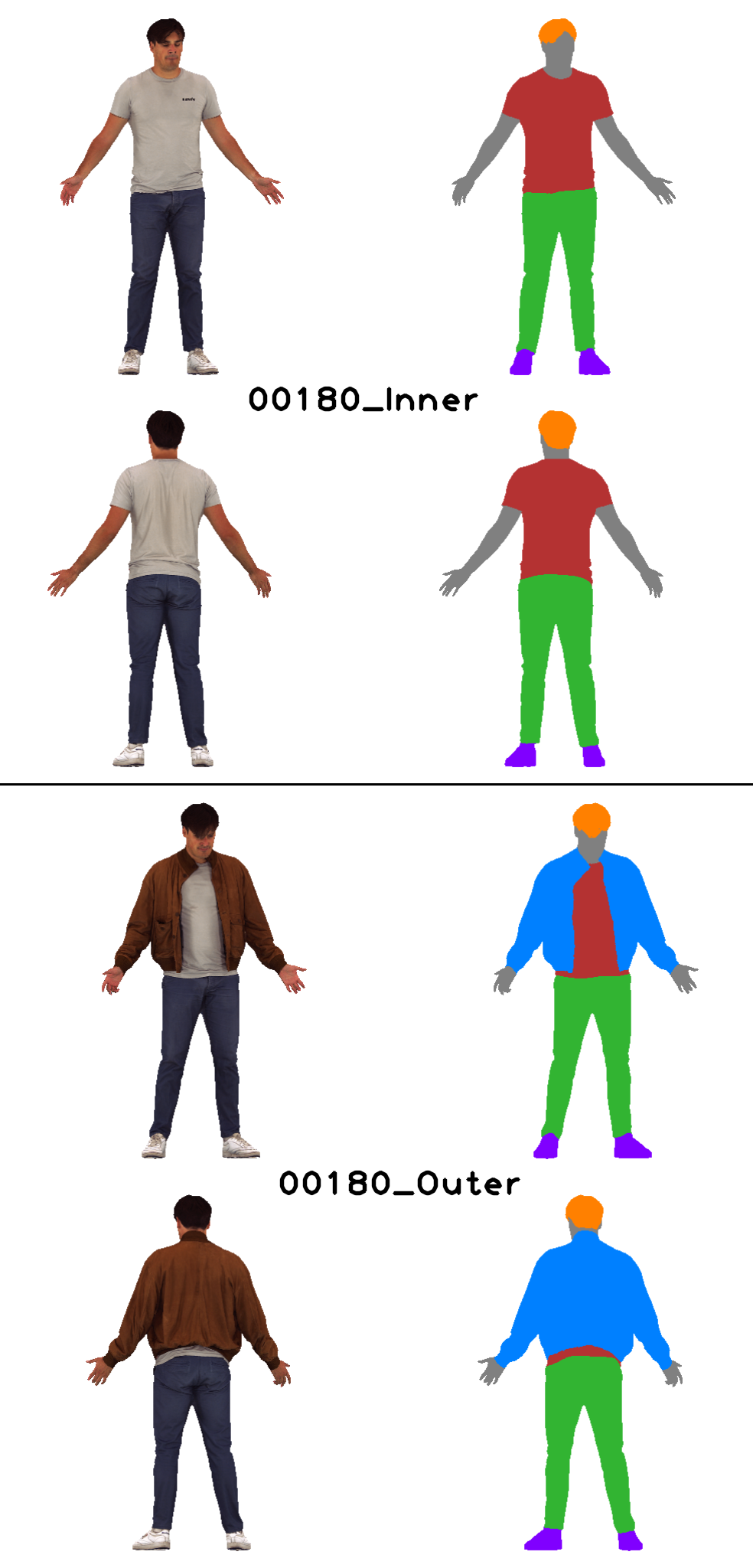

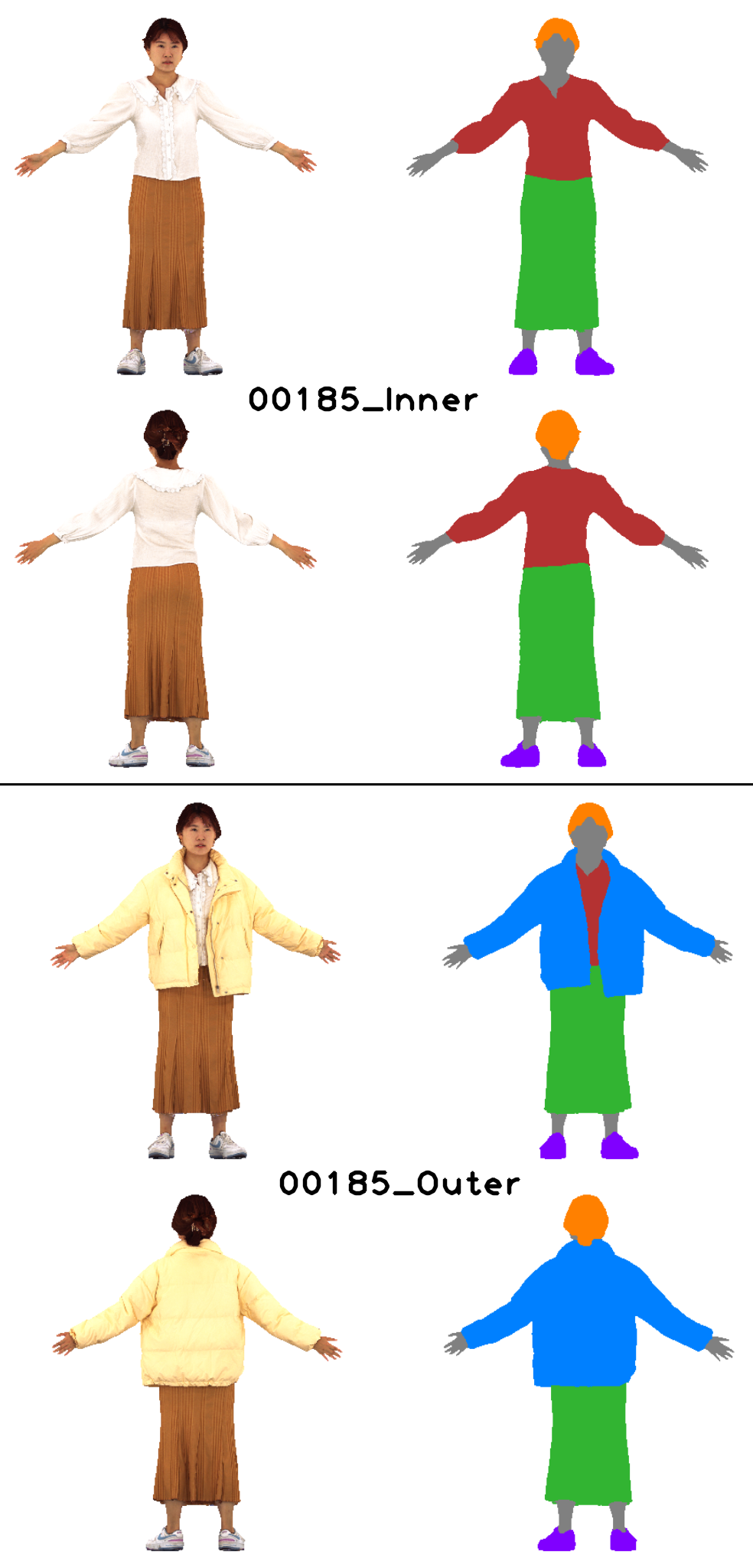

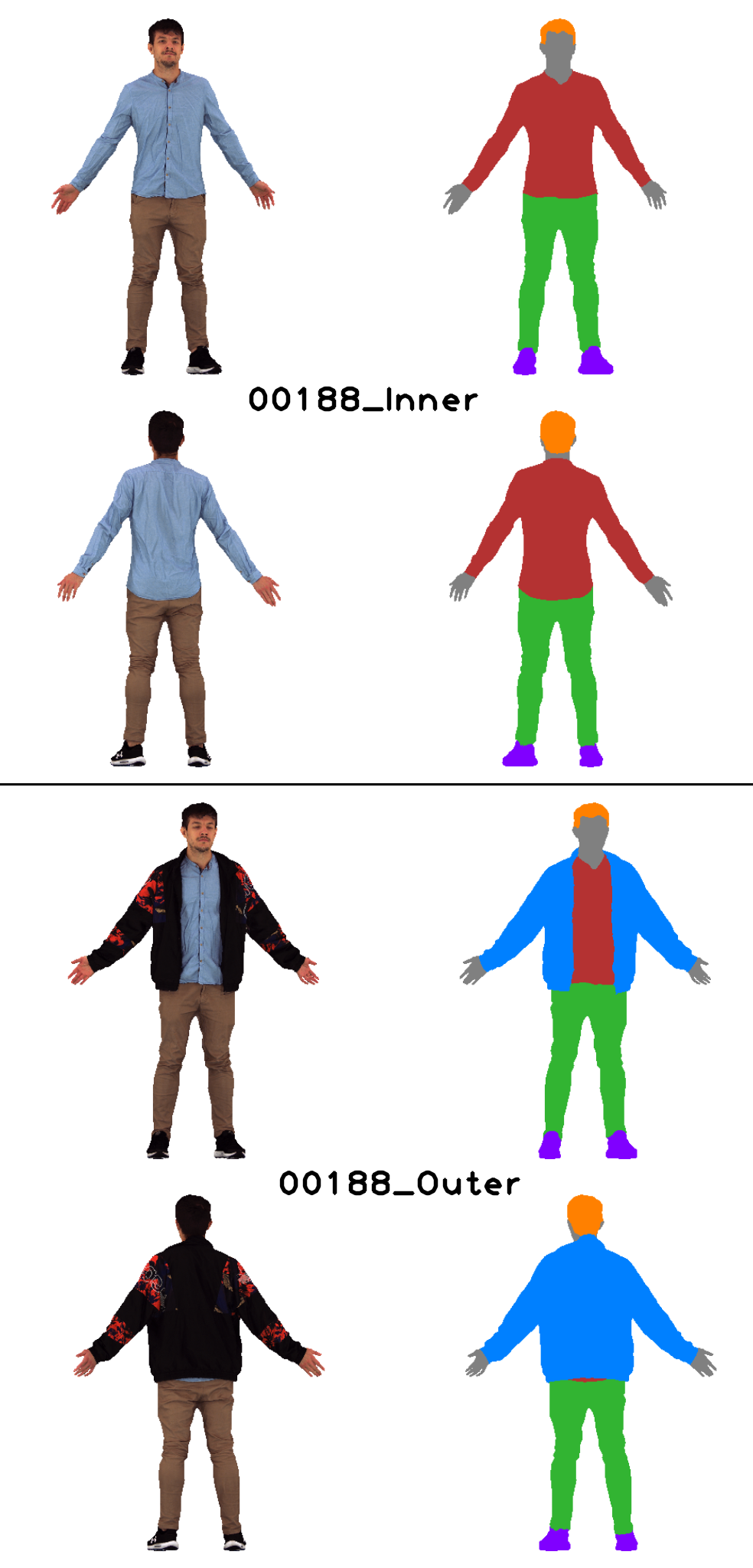

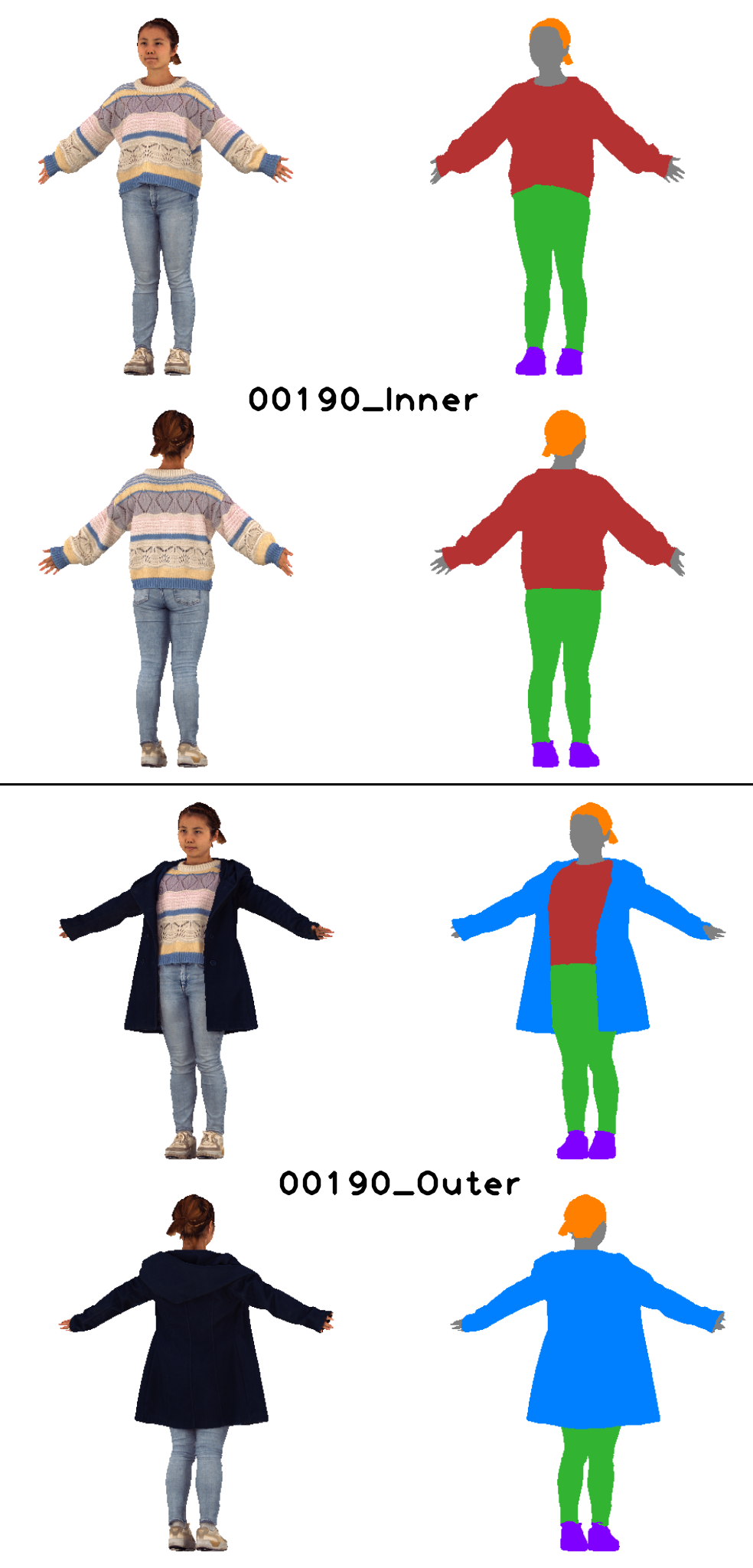

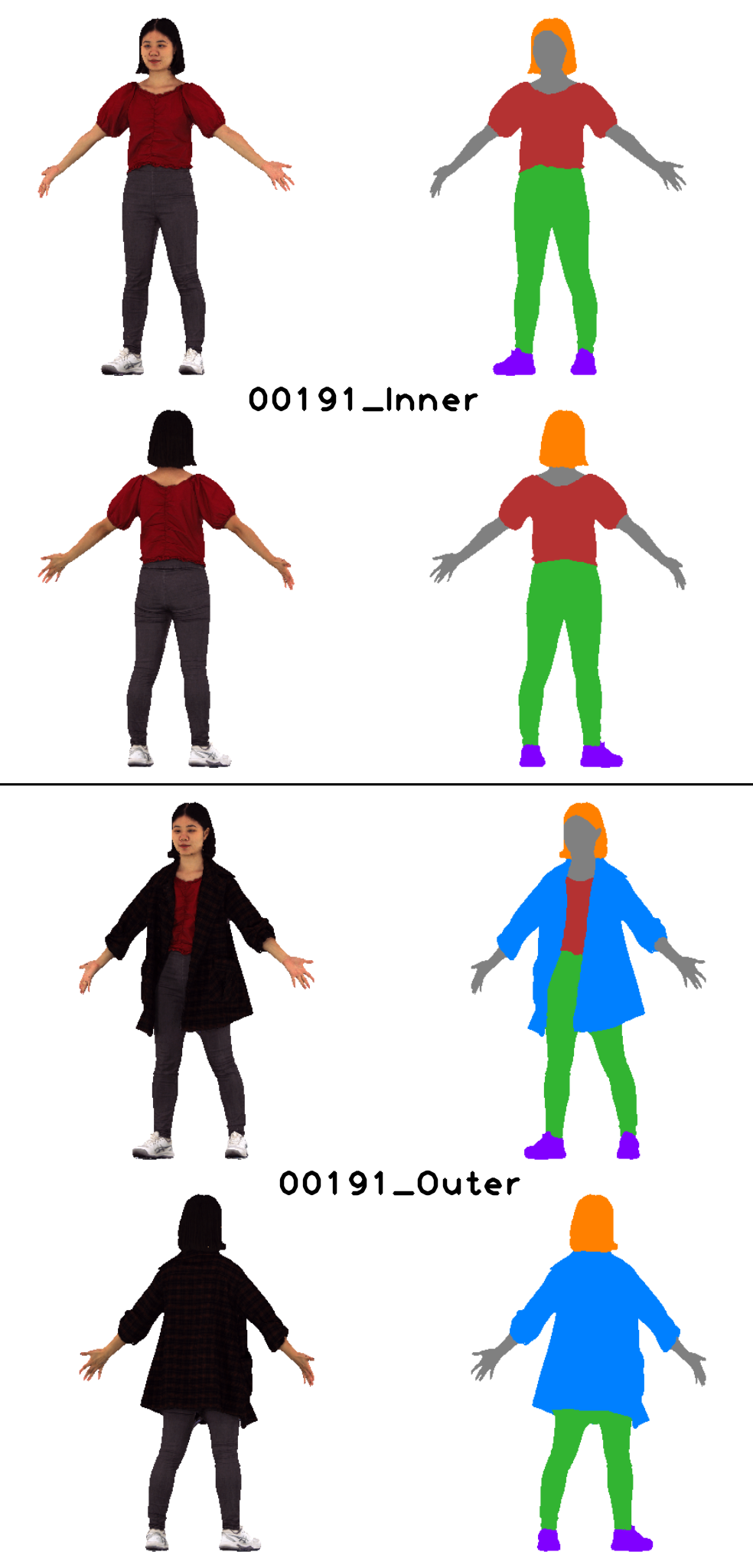

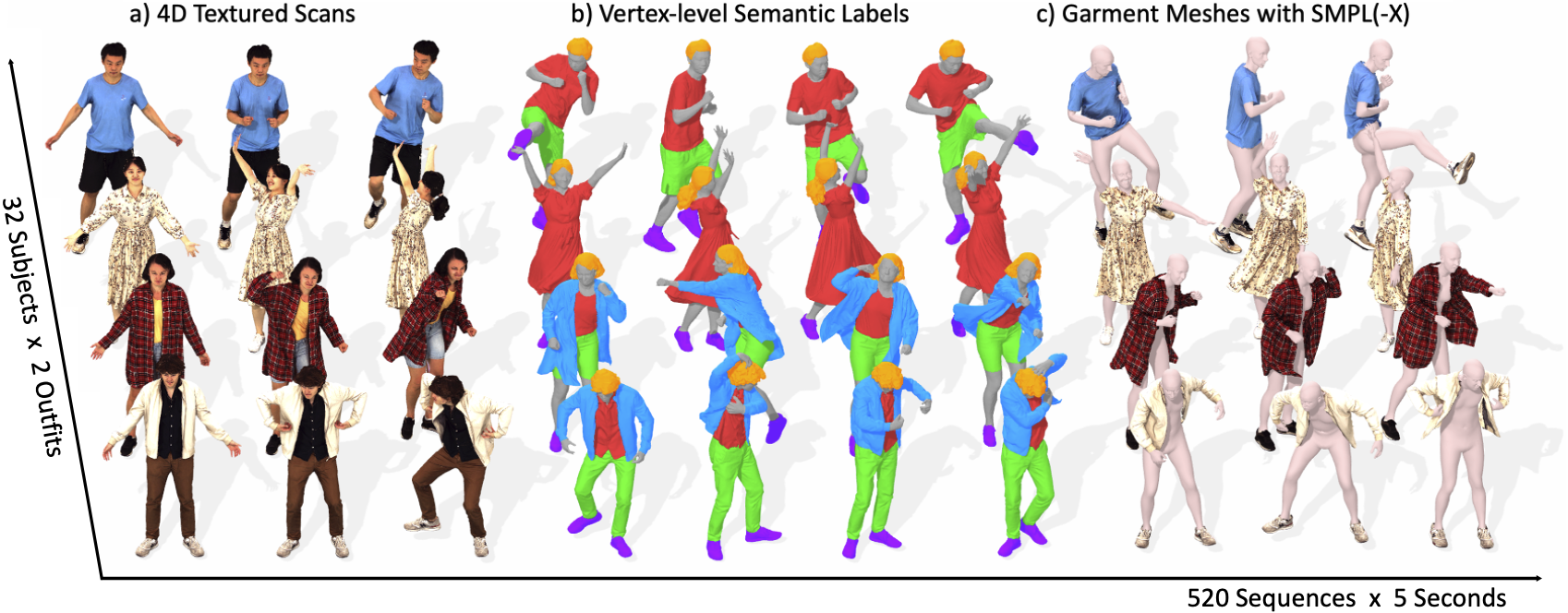

Dataset Overview. 4D-DRESS is the first real-world 4D dataset of human clothing, capturing 64 human outfits in more than 520 motion sequences. These sequences include a) high-quality 4D textured scans; for each scan, we annotate b) vertex-level semantic labels, thereby obtaining c) the corresponding garment meshes and fitted SMPL(-X) body meshes. Totally, 4D-DRESS captures dynamic motions of 4 dresses, 28 lower, 30 upper, and 32 outer garments. For each garment, we also provide its canonical template mesh to benefit the future human clothing study.