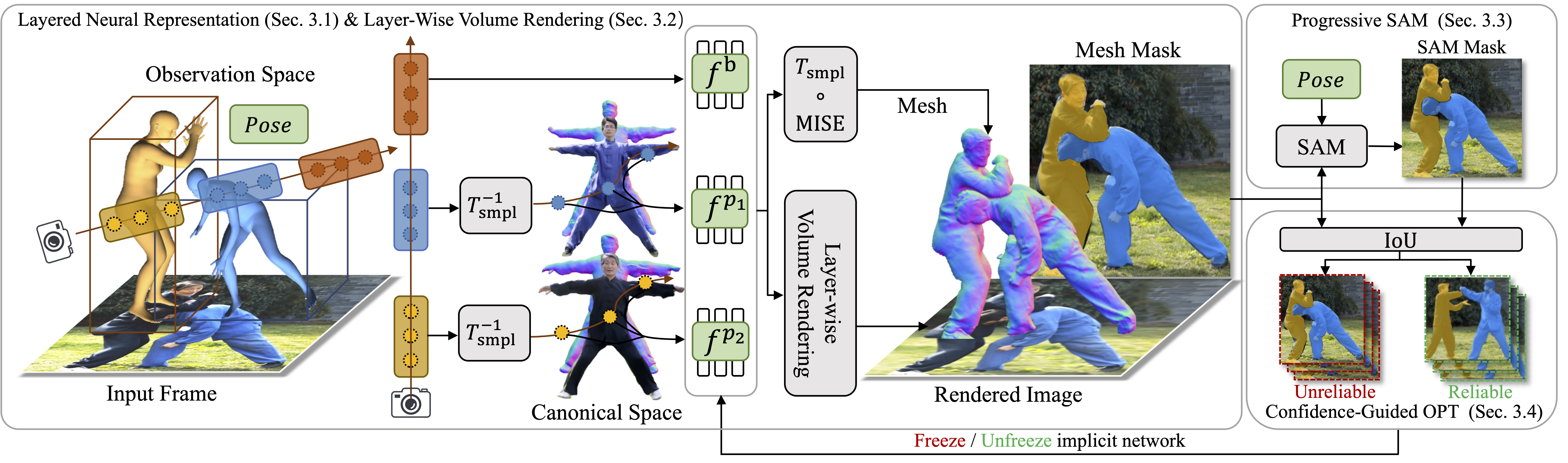

We present MultiPly, a novel framework for reconstructing multiple people in 3D from monocular in-the-wild videos. Reconstructing multiple individuals who move and interact naturally in such videos is challenging. It requires precise pixel-level disentanglement of individuals without any prior knowledge about the subjects. Moreover, it requires recovering intricate and complete 3D human shapes from short video sequences, which further increases the difficulty. To tackle these challenges, we first define a layered neural representation for the entire scene, composed of individual human models and a background model. We learn the layered neural representation from videos via our layer-wise differentiable volume rendering. This learning process is further enhanced by our hybrid instance segmentation approach, which combines self-supervised 3D segmentation with a promptable 2D segmentation module, yielding reliable instance segmentation supervision even under close human interaction. A confidence-guided optimization formulation is introduced to optimize the human poses and shape/appearance alternately. We incorporate effective objectives to refine human poses via photometric information and impose physically plausible constraints on human dynamics, leading to temporally consistent, high-fidelity 3D reconstructions. Evaluation on publicly available datasets and in-the-wild videos shows that our method outperforms prior art.

Given an image and SMPL estimates, we sample human points along each camera ray based on the bounding boxes of the SMPL bodies, and background points following NeRF++. We warp the sampled human points into canonical space via inverse warping and evaluate the person-specific implicit network to obtain SDF and radiance values. Layer-wise volume rendering is then applied to learn the implicit networks from images. We build a closed-loop refinement for instance segmentation by dynamically updating the prompts for SAM using the evolving human models. Finally, we formulate a confidence-guided optimization that optimizes only the pose parameters for unreliable frames and jointly optimizes pose and implicit networks for reliable frames.
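The compositing step in this pipeline can be illustrated with a small sketch. It is not the authors' implementation: the Laplace-CDF mapping from SDF to density (in the style of VolSDF), the `beta` value, the toy sample values, and the names `sdf_to_density` and `render_ray` are all assumptions made for illustration. The sketch merges the samples of every layer (each person plus the background) along one ray, sorts them by depth, and alpha-composites them front to back while tracking how much each layer contributes.

```python
import math


def sdf_to_density(sdf, beta=0.1):
    """Map a signed distance to a volume density via a scaled Laplace CDF.

    Assumption: a VolSDF-style mapping, so points inside a surface
    (sdf < 0) receive a higher density than points outside it.
    """
    if sdf >= 0:
        cdf = 0.5 * math.exp(-sdf / beta)
    else:
        cdf = 1.0 - 0.5 * math.exp(sdf / beta)
    return cdf / beta


def render_ray(samples):
    """Composite the samples of all layers along a single camera ray.

    samples: iterable of (depth, delta, density, rgb, layer) tuples, where
    delta is the length of the ray interval the sample represents and
    layer is a hashable id (one per person, plus one for the background).
    Returns (pixel_rgb, layer_opacity); layer_opacity maps each layer id
    to its accumulated rendering weight.
    """
    transmittance = 1.0
    pixel = [0.0, 0.0, 0.0]
    layer_opacity = {}
    # Merge all layers into one front-to-back ordering by depth.
    for depth, delta, density, rgb, layer in sorted(samples, key=lambda s: s[0]):
        alpha = 1.0 - math.exp(-density * delta)
        weight = transmittance * alpha
        pixel = [p + weight * c for p, c in zip(pixel, rgb)]
        layer_opacity[layer] = layer_opacity.get(layer, 0.0) + weight
        transmittance *= 1.0 - alpha
    return pixel, layer_opacity


# Toy ray (hypothetical values): a red person in front of a blue person,
# followed by an opaque gray background sample.
inside = sdf_to_density(-0.05)
ray = [
    (2.0, 0.1, inside, (0.0, 0.0, 1.0), "person_1"),
    (2.1, 0.1, inside, (0.0, 0.0, 1.0), "person_1"),
    (1.0, 0.1, inside, (1.0, 0.0, 0.0), "person_0"),
    (1.1, 0.1, inside, (1.0, 0.0, 0.0), "person_0"),
    (5.0, 1.0, 50.0, (0.5, 0.5, 0.5), "background"),
]
pixel, opacity = render_ray(ray)
print(pixel, opacity)
```

In a setup like this, the per-layer opacities behave as soft instance masks: the person closest to the camera receives most of the weight, and occluded layers receive only what is left of the transmittance. Such masks could then be compared against 2D segmentation masks, which is one plausible way to connect the rendering to instance-level supervision.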

Our method generates complete human shapes with sharp boundaries and spatially coherent 3D reconstructions and outperforms existing state-of-the-art methods.

Our method generalizes to various people with different human shapes and miscellaneous clothing styles and performs robustly against different levels of occlusions, close human interaction, and environmental visual complexities.

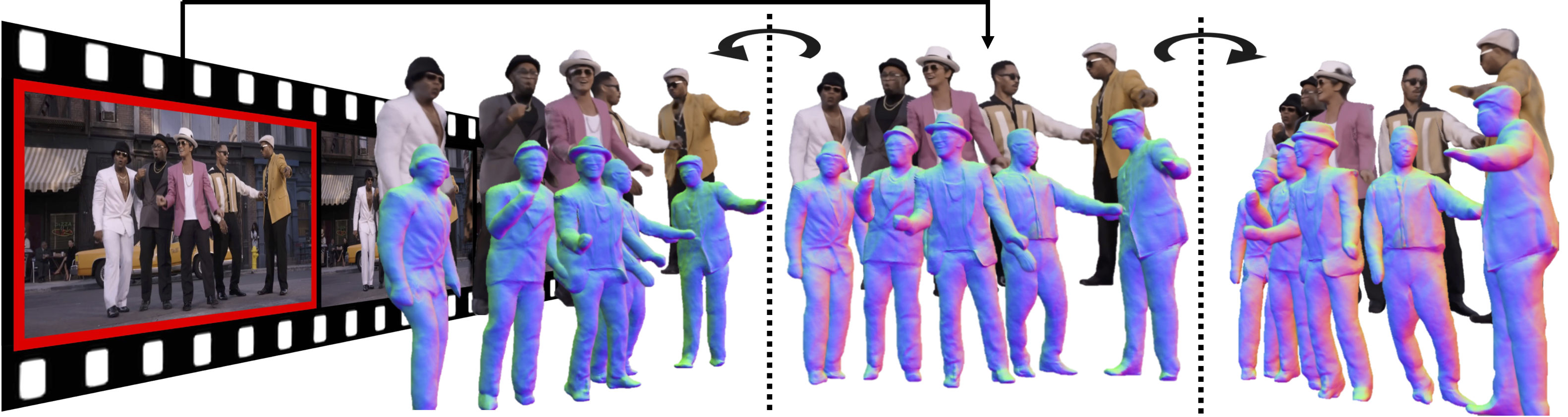

The reconstructed 3D meshes can be viewed from different angles.

To evaluate the generalization of our method, we collected a dataset called Monocular Multi-huMan (MMM) with a hand-held smartphone. It contains six sequences with two to four persons in each sequence. Half of the sequences were captured on a stage with ground-truth annotations for quantitative evaluation, and the others were captured in the wild for qualitative evaluation. Please fill out the MMM Application Form to access the MMM dataset. We will send you an email with more information once your application is approved.

This work was partially supported by the Swiss SERI Consolidation Grant "AI-PERCEIVE". Chen Guo was supported by a Microsoft Research Swiss JRC Grant. We thank Juan Zarate for proofreading and insightful discussions. We also want to express our gratitude to the participants in our dataset. We use AITViewer for 2D/3D visualizations. All experiments were performed on the ETH Zürich Euler cluster.

@inproceedings{multiply,
  title = {MultiPly: Reconstruction of Multiple People from Monocular Video in the Wild},
  author = {Jiang, Zeren and Guo, Chen and Kaufmann, Manuel and Jiang, Tianjian and Valentin, Julien and Hilliges, Otmar and Song, Jie},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2024},
}