We present EMDB, the Electromagnetic Database of Global 3D Human Pose and Shape in the Wild. EMDB is a novel dataset that contains high-quality 3D SMPL pose and shape parameters with global body and camera trajectories for in-the-wild videos. We use body-worn, wireless electromagnetic (EM) sensors and a hand-held iPhone to record a total of 58 minutes of motion data, distributed over 81 indoor and outdoor sequences and 10 participants. Together with accurate body poses and shapes, we also provide global camera poses and body root trajectories. To construct EMDB, we propose a multi-stage optimization procedure, which first fits SMPL to the 6-DoF EM measurements and then refines the poses via image observations. To achieve high-quality results, we leverage a neural implicit avatar model to reconstruct detailed human surface geometry and appearance, which allows for improved alignment and smoothness via a dense pixel-level objective. Our evaluations, conducted with a multi-view volumetric capture system, indicate that EMDB has an expected accuracy of 2.3 cm positional and 10.6 degrees angular error, surpassing the accuracy of previous in-the-wild datasets. We evaluate existing state-of-the-art monocular RGB methods for camera-relative and global pose estimation on EMDB.
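The accuracy figures above are given as a positional error (in cm) and an angular error (in degrees). As a rough illustration of what such numbers measure, the sketch below uses two common definitions: the mean Euclidean distance between corresponding 3D points, and the geodesic angle between two rotation matrices. These definitions and the function names are assumptions made for illustration. The exact evaluation protocol used for EMDB is not described on this page and may differ.

```python
import math


def mean_position_error(pred, gt):
    """Mean Euclidean distance between corresponding 3D points.

    The result has the same unit as the inputs (e.g. metres).
    """
    assert len(pred) == len(gt) and len(pred) > 0
    return sum(math.dist(p, g) for p, g in zip(pred, gt)) / len(pred)


def rotation_angle_deg(R_a, R_b):
    """Geodesic angle in degrees between two 3x3 rotation matrices."""
    # trace(R_a^T R_b) equals the sum of element-wise products.
    trace = sum(R_a[i][j] * R_b[i][j] for i in range(3) for j in range(3))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))


def rot_z(deg):
    """Helper for the example: rotation about the z-axis by `deg` degrees."""
    a = math.radians(deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


if __name__ == "__main__":
    # Made-up toy values, not data from EMDB.
    pred = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    gt = [(0.0, 0.0, 0.02), (1.0, 0.04, 0.0)]
    print(mean_position_error(pred, gt))
    print(rotation_angle_deg(rot_z(0.0), rot_z(10.0)))
```

In practice, such per-joint errors would be averaged over all joints and frames of a sequence. How joints are selected and aligned before averaging is a protocol choice that this sketch leaves open.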

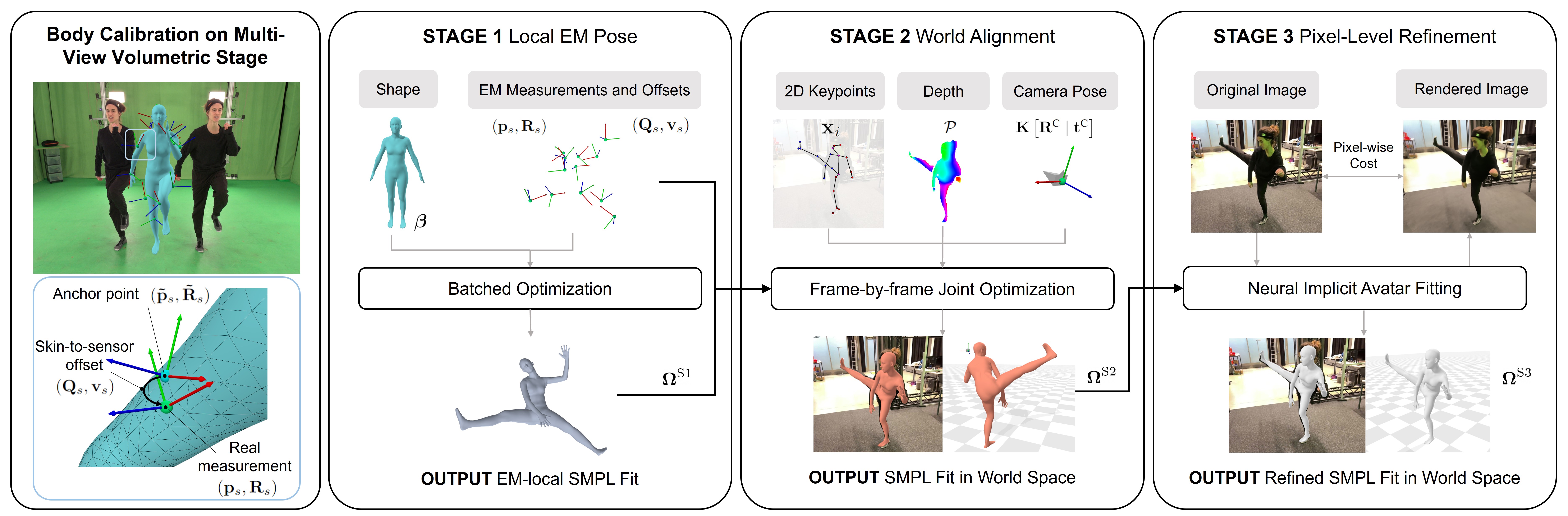

We first scan a subject in minimal clothing with a multi-view volumetric capture system to obtain their reference shape parameters and calibrate subject-specific skin-to-sensor offsets in regular clothing (left). We subsequently fit SMPL to in-the-wild data with a multi-stage optimization pipeline. Stage 1 fits SMPL to the EM measurements in EM-local space, leveraging the calibrated body shape and skin-to-sensor offsets. Stage 2 aligns the local fit with the world by jointly optimizing over 2D keypoints, depth, camera poses, EM measurements, and the output of Stage 1. Stage 3 then refines the output of Stage 2 by fitting a neural implicit body model with detailed geometry and appearance to the RGB images via a pixel-level supervision signal to boost smoothness and image-to-pose alignment.
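To make the role of the calibrated skin-to-sensor offsets more concrete, the following toy sketch shows how a sensor position could be predicted from a bone's rigid transform and a fixed offset, and how it could be compared with a measurement. This is not the authors' objective function. The function names, the rigid-attachment assumption, and the example values are all hypothetical, and the actual Stage 1 also involves sensor orientations and the SMPL body model.

```python
import math


def mat_vec(R, v):
    """Multiply a 3x3 matrix (list of rows) with a 3-vector."""
    return [sum(R[i][k] * v[k] for k in range(3)) for i in range(3)]


def predicted_sensor_position(bone_R, bone_t, offset):
    """Position of a sensor rigidly attached to a bone: R @ offset + t.

    `offset` is the (hypothetical) calibrated skin-to-sensor offset,
    expressed in the bone's local coordinate frame.
    """
    rotated = mat_vec(bone_R, offset)
    return [rotated[i] + bone_t[i] for i in range(3)]


def position_residual(bone_R, bone_t, offset, measured):
    """Euclidean distance between predicted and measured sensor position."""
    predicted = predicted_sensor_position(bone_R, bone_t, offset)
    return math.dist(predicted, measured)


if __name__ == "__main__":
    # Made-up example: bone rotated 90 degrees about z, translated along x.
    bone_R = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
    bone_t = [1.0, 0.0, 0.0]
    offset = [0.02, 0.0, 0.0]  # sensor sits 2 cm from the bone origin
    measured = [1.0, 0.02, 0.01]
    print(position_residual(bone_R, bone_t, offset, measured))
```

An optimizer would adjust the body pose so that residuals of this kind, summed over all sensors, become small. Calibrating the offsets per subject beforehand keeps them from being confused with pose errors.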

EMDB contains SMPL poses with global body root and camera trajectories. In the following we show some samples taken from EMDB.

To download EMDB, please fill out the online application form. Once your application is approved, you will receive an e-mail with instructions on how to download the data.

We thank Robert Wang, Emre Aksan, Braden Copple, Kevin Harris, Mishael Herrmann, Mark Hogan, Stephen Olsen, Lingling Tao, Christopher Twigg, and Yi Zhao for their support. Thanks to Dean Bakker, Andrew Searle, and Stefan Walter for their help with our infrastructure. Thanks to Marek, developer of record3d, for his help with the app. Thanks to Laura Wülfroth and Deniz Yildiz for their assistance with capture. Thanks to Dario Mylonopoulos for his priceless work on the aitviewer which we used extensively in this work. All visualizations were done with aitviewer. We are grateful to all our participants for their valued contribution to this research. Computations were carried out in part on the ETH Euler cluster.

If you have any questions, please open an issue on GitHub or contact Manuel Kaufmann.

@inproceedings{kaufmann2023emdb,
  author = {Kaufmann, Manuel and Song, Jie and Guo, Chen and Shen, Kaiyue and Jiang, Tianjian and Tang, Chengcheng and Z{\'a}rate, Juan Jos{\'e} and Hilliges, Otmar},
  title = {{EMDB}: The {E}lectromagnetic {D}atabase of {G}lobal 3{D} {H}uman {P}ose and {S}hape in the {W}ild},
  booktitle = {International Conference on Computer Vision (ICCV)},
  year = {2023}
}

Data acquisition, development of the processing pipeline and all experiments have been performed entirely at ETH Zurich. Meta did not obtain any data or code prior to publication and did not conduct any experiments in this work.