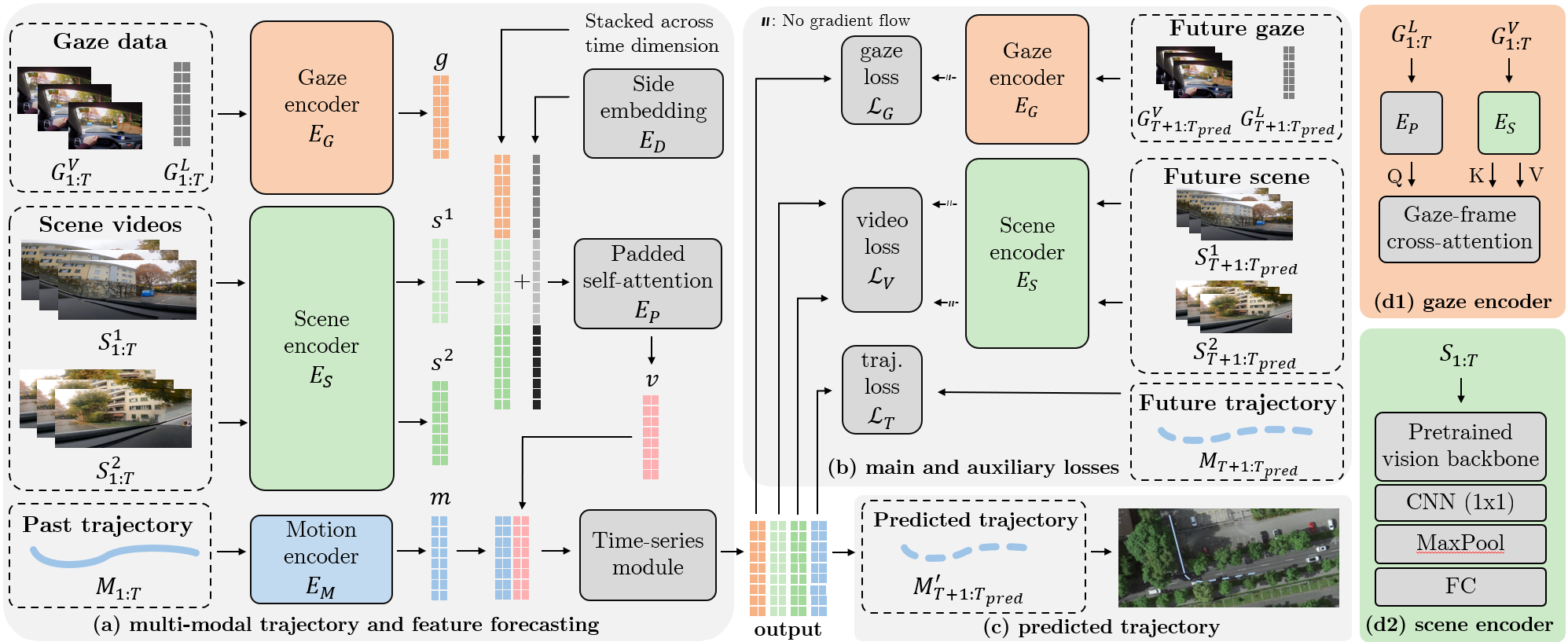

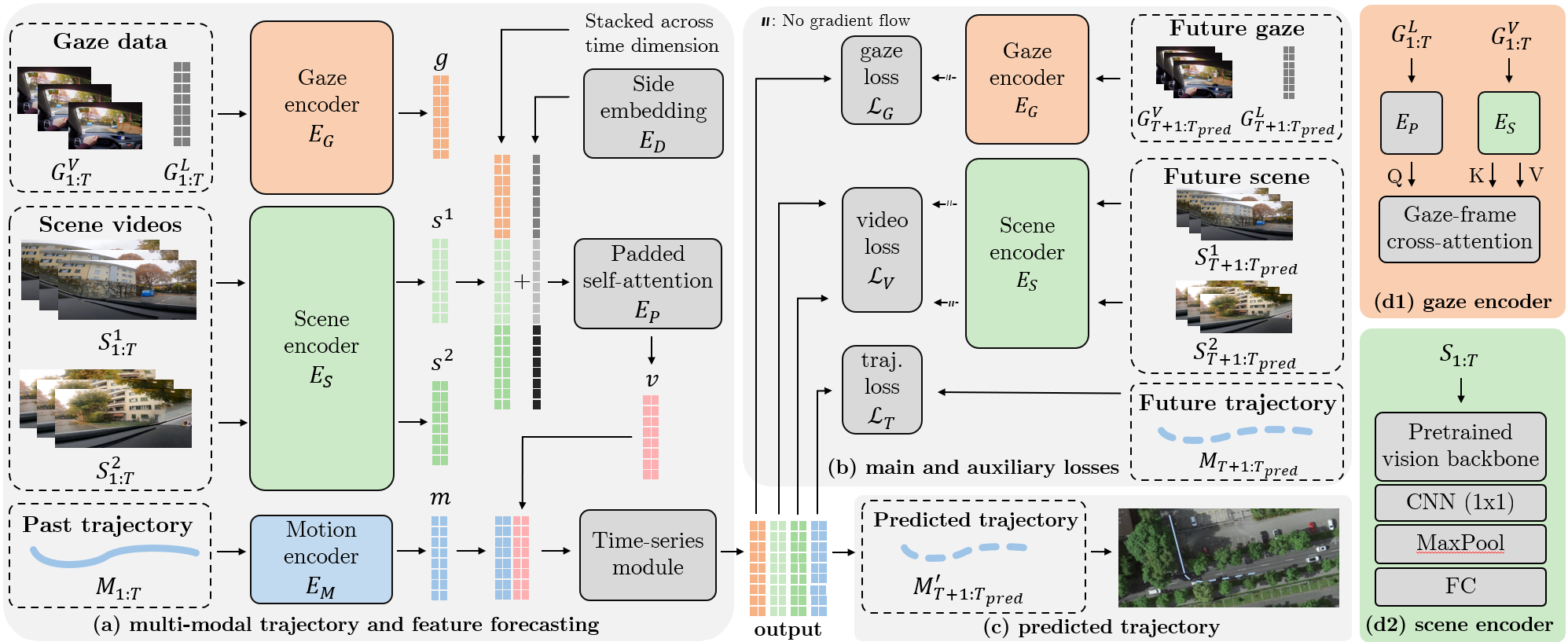

Understanding the decision-making process of drivers is one of the keys to ensuring road safety. While driver intent and the resulting ego-motion trajectory are valuable for developing driver-assistance systems, existing methods mostly focus on the motions of other vehicles. In contrast, we focus on inferring the ego-trajectory of a driver's vehicle from the driver's gaze data. For this purpose, we first collect a new dataset, GEM, which contains high-fidelity ego-motion videos paired with drivers' eye-tracking data and GPS coordinates. Next, we develop G-MEMP, a novel multimodal ego-trajectory prediction network that combines GPS and video input with gaze data. We also propose a new metric, the Path Complexity Index (PCI), to measure trajectory complexity. We perform extensive evaluations of the proposed method on both GEM and DR(eye)VE, an existing benchmark dataset. The results show that G-MEMP significantly outperforms state-of-the-art methods on both benchmarks. Furthermore, ablation studies demonstrate an improvement of over 20% in average displacement when gaze data is used, particularly in challenging driving scenarios with a high PCI. The data, code, and models will be made public.

@misc{akbiyik2023gmemp,
  title={G-MEMP: Gaze-Enhanced Multimodal Ego-Motion Prediction in Driving},
  author={M. Eren Akbiyik and Nedko Savov and Danda Pani Paudel and Nikola Popovic and Christian Vater and Otmar Hilliges and Luc Van Gool and Xi Wang},
  year={2023},
  eprint={2312.08558},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}