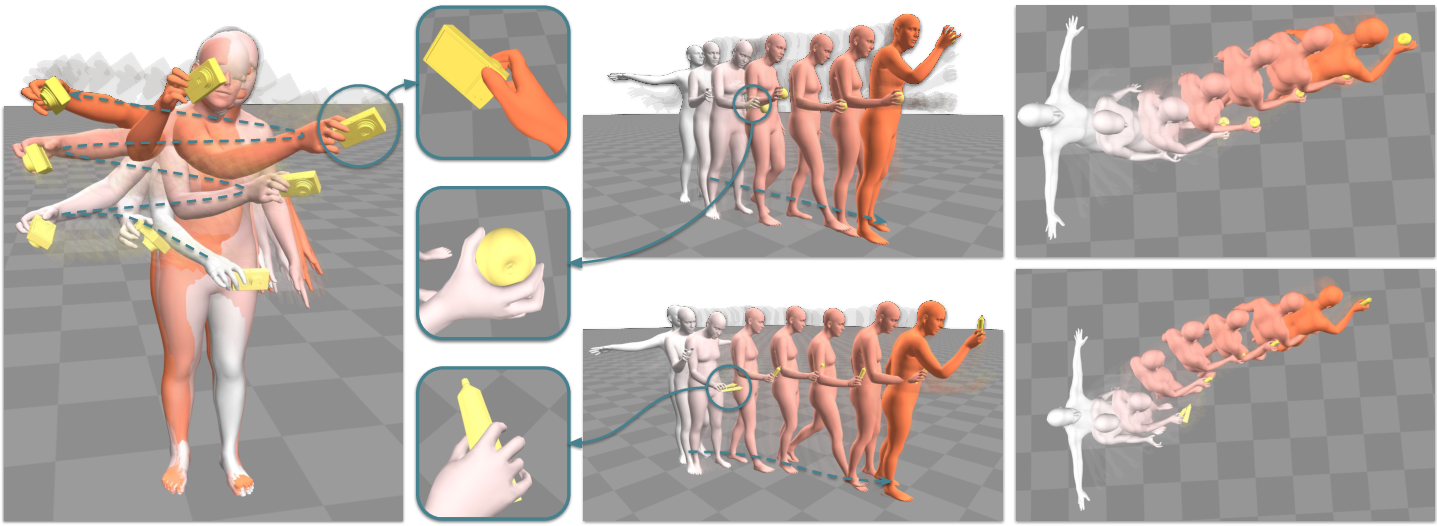

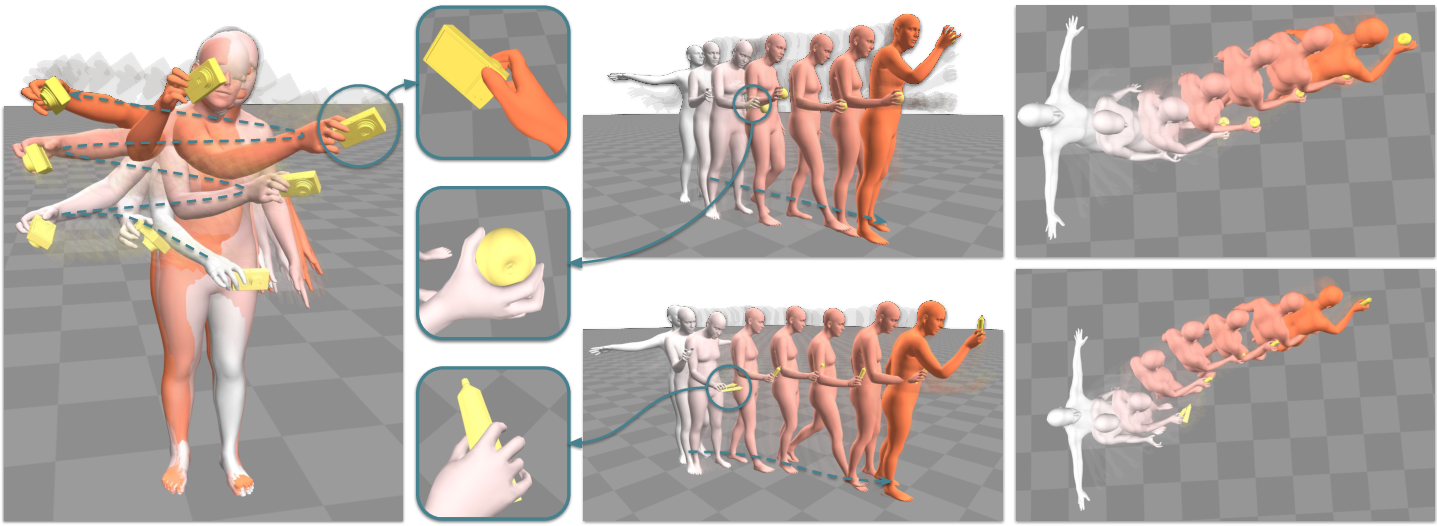

We propose a physics-based method for synthesizing dexterous hand-object interactions in a full-body setting. While recent advancements have addressed specific facets of human-object interactions, a comprehensive physics-based approach remains a challenge. Existing methods often focus on isolated segments of the interaction process and rely on data-driven techniques that can produce artifacts. In contrast, our method uses reinforcement learning (RL) and physics simulation to mitigate the limitations of data-driven approaches. Through a hierarchical framework, we first learn skill priors for body and hand movements in a decoupled setting. These generic skill priors learn to decode a latent skill embedding into the motion of the corresponding part. A high-level policy then controls hand-object interactions in these pretrained latent spaces, guided by the task objectives of grasping and 3D target trajectory following. The high-level policy is trained with a novel reward function that combines an adversarial style term with a task reward, encouraging natural motions while fulfilling the task incentives. Our method accomplishes the complete interaction task, from approaching an object to grasping and subsequent manipulation. We compare our approach against kinematics-based baselines and show that it leads to more physically plausible motions.
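To make the reward structure concrete, here is a minimal Python sketch of how an adversarial style term might be combined with a task reward. The abstract does not state the functional form or the weights, so everything here is an assumption made for illustration: the softplus-based style reward (a common choice in adversarial motion prior setups), the clipping value, and the names `style_reward`, `combined_reward`, `w_style`, and `w_task` are all hypothetical.

```python
import math


def style_reward(disc_logit: float, cap: float = 10.0) -> float:
    """Style reward from a discriminator logit (assumed form, not from the paper).

    Computes -log(1 - sigmoid(logit)), which equals softplus(logit),
    using a numerically stable expression, then clips it at `cap`.
    Higher logits mean the discriminator finds the motion more natural.
    """
    softplus = max(disc_logit, 0.0) + math.log1p(math.exp(-abs(disc_logit)))
    return min(softplus, cap)


def combined_reward(
    disc_logit: float,
    task_reward: float,
    w_style: float = 0.5,  # hypothetical weight
    w_task: float = 0.5,   # hypothetical weight
) -> float:
    """Weighted sum of the style term and the task term (assumed combination)."""
    return w_style * style_reward(disc_logit) + w_task * task_reward


if __name__ == "__main__":
    # A motion the discriminator rates as neutral (logit 0) with full task reward.
    print(combined_reward(disc_logit=0.0, task_reward=1.0))
```

In this sketch the task term would carry the grasping and trajectory-following objectives, while the style term rewards motions that the discriminator cannot distinguish from reference motion data.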

@inproceedings{braun2023physically,
  title={Physically Plausible Full-Body Hand-Object Interaction Synthesis},
  author={Jona Braun and Sammy Christen and Muhammed Kocabas and Emre Aksan and Otmar Hilliges},
  booktitle={International Conference on 3D Vision (3DV)},
  year={2024}
}