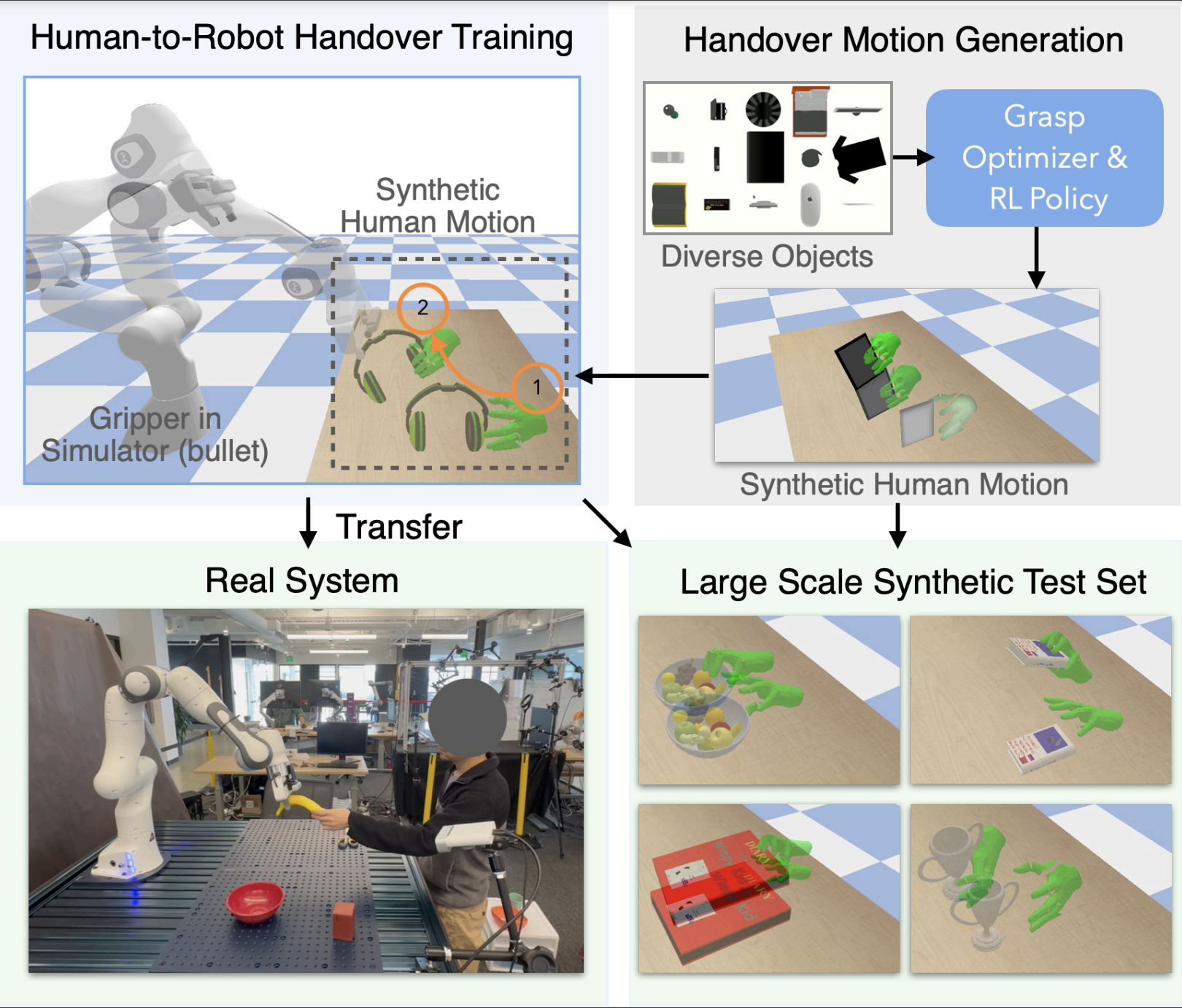

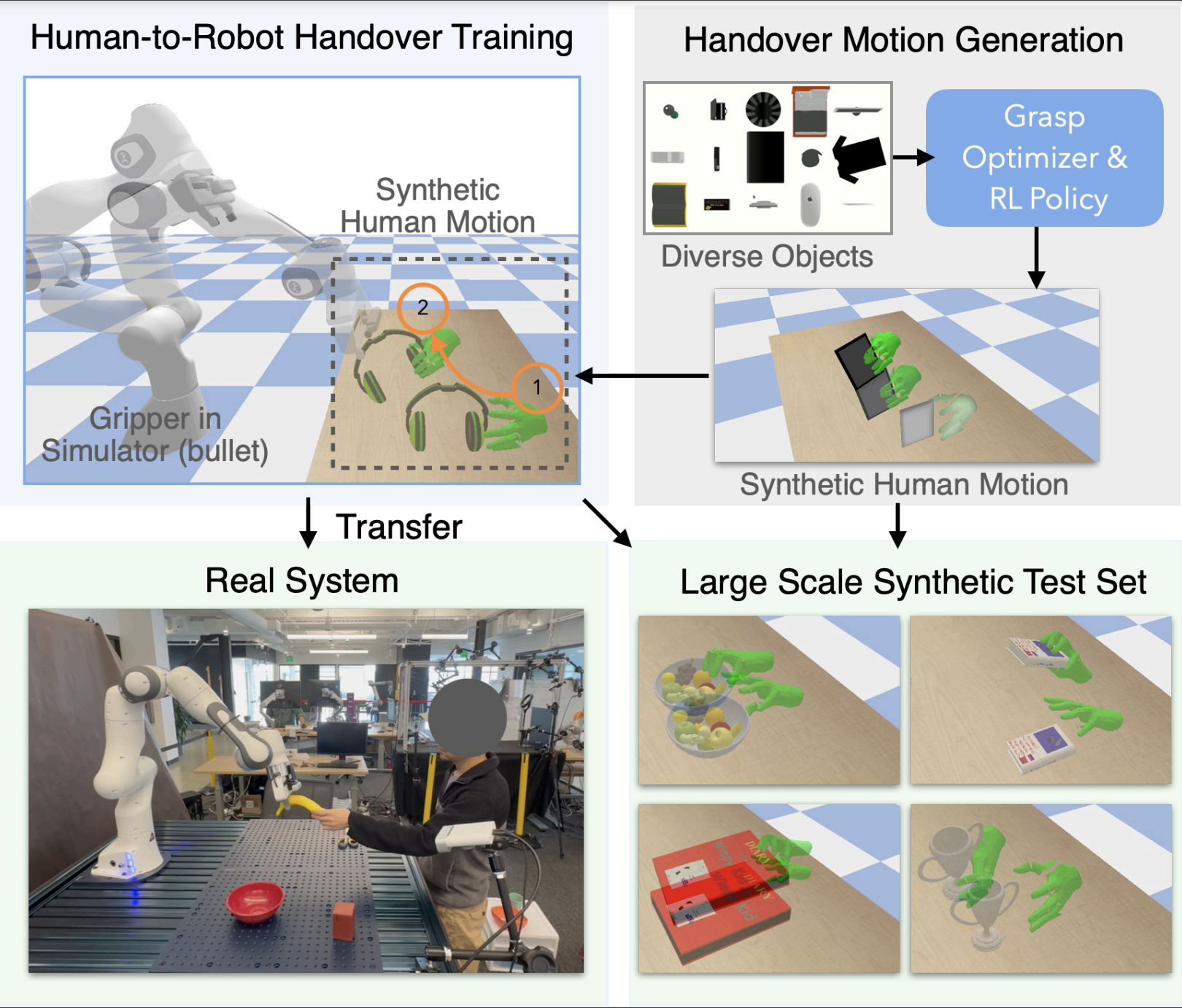

Vision-based human-to-robot handover is an important and challenging task in human-robot interaction. Recent work has attempted to train robot policies by interacting with dynamic virtual humans in simulated environments, where the policies can later be transferred to the real world. However, a major bottleneck is the reliance on human motion capture data, which is expensive to acquire and difficult to scale to arbitrary objects and human grasping motions. In this paper, we introduce a framework that can generate plausible human grasping motions suitable for training the robot. To achieve this, we propose a hand-object synthesis method that is designed to generate handover-friendly motions similar to those of humans. This allows us to generate synthetic training and testing data with 100x more objects than previous work. In our experiments, we show that our method, trained purely with synthetic data, is competitive with state-of-the-art methods that rely on real human motion data, both in simulation and on a real system. In addition, we can perform evaluations on a larger scale than prior work. With our newly introduced test set, we show that our model scales better than the baselines to a large variety of unseen objects and human motions.

@inproceedings{christen2023synh2r,
  title={SynH2R: Synthesizing Hand-Object Motions for Learning Human-to-Robot Handovers},
  author={Sammy Christen and Lan Feng and Wei Yang and Yu-Wei Chao and Otmar Hilliges and Jie Song},
  booktitle={IEEE International Conference on Robotics and Automation (ICRA)},
  year={2024}
}